Digital sovereignty has quickly become one of the most pressing policy issues in Canada. From the U.S. Patriot Act and Cloud Act to the risks of foreign ownership of critical infrastructure, Canada finds itself increasingly exposed in ways most citizens never see. Government records, health data, infrastructure models, and geospatial systems are often stored on foreign servers, and even sensitive public-sector systems, such as those used by the Department of National Defence, have been migrated to U.S. cloud providers. These choices raise fundamental questions about control, security, and the country’s ability to protect its own information.

Despite these risks, Canada has yet to clearly define what sovereignty should mean in the digital age. The result is a fragmented landscape: privacy rules that remain weak, consumer protections that are almost absent, and data-sharing practices that vary across provinces, institutions like hospitals, and agencies at all levels.

To explore what data sovereignty really means for Canada, we sat down with Tracey Lauriault, Associate Professor of Critical Media and Big Data at Carleton University and an expert on data governance. In this wide-ranging conversation, she discusses the gaps in Canada’s current approach, lessons from Scandinavia and the EU, and why sovereignty must be understood not only as a technical issue but as a political, economic, and ethical one.

Excerpts:

To start with, what exactly do we mean by data sovereignty?

There are different definitions. There’s also digital sovereignty and then data sovereignty, and they’re intertwined.

Digital sovereignty is about the infrastructure we control — the systems that produce and store data, and whether we have autonomy in producing the technologies themselves. One way to think of it is Tesla during COVID: they didn’t have to rely on anyone else for chipsets or technology because they produced it all in the United States. That’s digital sovereignty.

Digital sovereignty means having autonomy over the full production cycle of tools, technologies, and infrastructures. Canada doesn’t do chip manufacturing anymore (though we did at one time), but it would be worth asking what it would take to bring some of that capacity back. At the very least, we could ensure that Canadian companies, including Crown corporations, control cloud computing and data centers in Canada, and implement geo-fencing to ensure information stays in Canada.

And that leads into the data conversation. Data sovereignty is about using digital sovereignty to manage data within Canadian borders — to keep it safe, to capitalize on it responsibly, and to ensure management is fair, trustworthy, and equitable.

Why is data sovereignty such a critical issue for Canada right now?

Because we don’t really have strong data protection laws. We have privacy rules, but they’re meager and out of date. They don’t protect us in terms of civil liberties, against misinformation, or against data brokers. We also don’t have consumer protection laws that cover how data is collected, sold, or reused.

Indigenous data sovereignty is part of this, too. It means First Nations, Métis, and Inuit govern their own data according to their own principles (FNIGC’s OCAP, or others), with the rights, roles, responsibilities, and technical capacities to manage their own infrastructures. That can be negotiated with the Crown, provinces, territories, or municipalities, or it can take the form of First Nations-, Métis-, and Inuit-owned and -operated systems and infrastructure.

In all cases, sovereignty means having control, access, ownership, and possession of data within your borders, as well as negotiating access practices with others. That is no different from how a company controls its own data.

Sovereignty doesn’t mean closing access. You can have sovereignty and openness at the same time. It just means setting conditions, through licenses, on who can use the data and for what purposes. Right now, many federal and provincial datasets are open to the world with no restrictions. What would it look like if U.S. or European data brokers needed special permission, or had to pay a fee, to access and capitalize on our data?

The dimensions are legal, infrastructural, ethical, Indigenous, and economic. Sovereignty isn’t just technical; it’s political and economic too. Think of the U.S. Patriot Act: it lets ICE access Canadian data. And when U.S. laws are weakened, there’s no guarantee Canadian data will be protected. That’s a data sovereignty issue.

What about the U.S. Cloud Act, which allows American authorities to access data from U.S. companies, even if it’s stored in Canada?

The question is how they enforce that if the data is here. Would Amazon or Microsoft refuse? Probably not, because the U.S. has become a technocracy, or what some call a “broligarchy.” Nobody’s following laws or regulations right now anyway. It’s all a big grab at this point in time.

So, what ought we do? Ought we not at least be able to gather our top cybersecurity, cloud computing, and data center experts in a room and have them figure this out? That’s what the U.S. National Academies and the National Science Foundation do. They gather people, put them in a room for a week, feed and water them, and by the end of the week, demand a series of recommendations for a strategy.

We don’t do that. But that’s what we need. We can’t leave this to the government alone — they’re not really capable of doing this on their own. And the 30-day consultation with experts for an AI strategy is limited at best. We need a conversation between the private, public, and nonprofit sectors on how to protect the economy, Canadian citizens, the data, and companies, and maybe even develop a strategic advantage. We are all citizens, and these actors ought to act as technological citizens with rights and responsibilities to keep us, our data, and technological systems safe!

There’s a reason people store data in Iceland or Sweden: because they’re safe. Why can’t we become that safe place?

Do you think the Canadian government has a coherent strategy for data sovereignty?

They may have some policies for government data — health data, for example. There was a time when storage had to be in Canada. I haven’t looked recently to see if that’s still true.

Think of GenAI. Canadian open data could easily be pulled into a U.S. repository and used in corporate AI projects. Do we want Statistics Canada data in someone else’s GenAI project?

My understanding is that the government keeps some health data and StatCan data here. But there’s no law or regulation making it essential for companies to do the same. We have very little consumer protection, and probably very little corporate protection, over how Canadian data is produced and reused, whether by Canadian companies, Canadian citizens, governments, or nonprofits.

Beyond public service data and privacy law, nothing governs these other ethical concerns and malfeasance; laws have not caught up.

What do you see as the biggest policy gaps?

We need consumer protection — data brokerage companies are a concern. We need cybersecurity protection. We need a national digital strategy and regulation that is more encompassing and systems-based.

Think of digital twin data or building operations data. Companies generate sensitive data about heating plants, electrical plants, etc. If those sensors feed to a U.S. company, then the U.S. government has access to very sensitive Canadian infrastructure data. From a cybersecurity perspective, that’s a serious problem.

When we think about sensors, digital twins, and smart cities, it matters who you contract with and where they store your data. Most conversations at technology conferences are primarily technical, including scholarly papers and grey literature. There’s almost no discussion of governance, ownership, control, access, possession, ethics, or sovereignty.

They are all aspirational: “Smart tech will solve climate change. GenAI will solve global problems. Digital twins will solve everything.” None of that is true. Governance and people issues aren’t being discussed, let alone environmental sustainability.

Procurement is another example. If I were the government or a university like Carleton, my contract should stipulate that the data stay here. But do people know they can do that? I think there’s a fundamental disconnect between ideals and practices.

Take MS Teams, which we’re using now. Where are those data? Not here. Autodesk stores its design data in the U.S. — that’s all strategic information. Is there a guarantee they won’t be transferred? Think of subsurface data infrastructure or digital twins of that — that’s nation-scale strategic cybersecurity information. If it goes to the U.S., there’s no protection.

We need to think collectively about what is good for Canadian companies, citizens, and the nation’s cybersecure future. Cybersecurity is strategic, and we’re not discussing it enough.

Should private, nonprofit, and academic organizations be required to follow the same hosting norms as the public sector, to keep data in Canada? Is that feasible?

Why not? That’s a question I don’t know the full answer to. I haven’t done an inventory of all the data centers and cloud facilities in the country. But certainly, we could. Certainly, we are building some. With the new infrastructure funding, especially in AI, we definitely need more powerful compute environments because our current ones are not very strong. That would be really useful.

Will it be strictly Canadian or somewhere else? That’s the question. But is it possible? Yes, it’s possible.

We know that Sweden, Norway, Denmark, Greenland, and Iceland know how to do it. Those countries are smaller than us, with fewer people, and they can do it. Why can’t we? To me, nothing is impossible. We just need to make the strategic decision to do it, and then do it.

Why does Canada struggle with data sovereignty, and what international models could it learn from?

In the Scandinavian countries, for instance, they have a very different mindset. They are techno-politically astute. We, in Canada, still behave like a colony. We let everybody take stuff, sell cheap, pull stuff out of the ground, provide cheap leases, and we outsource production, and those who try to do otherwise are not well supported by the government to stay.

We still behave as an extractive economy. We don’t make national strategic decisions on our own, although the recent tariff issues are prompting us to refocus our efforts and realize that we have capacity and potential.

In the Scandinavian countries, they don’t have a problem making those decisions. They understand what technological sovereignty means. They have the capability, the knowledge, the engineers, and they consider it a social function.

For us, it’s always haphazard — random committees, temporary working groups, abysmal consultations — but not embedded in our DNA. And it’s not in the DNA of our companies either unless they must adhere to EU GDPR and other standards to access those markets.

In Scandinavian countries, are companies competitive? Yes. Are they entrepreneurial? Yes. Do they want a strategic advantage? Yes. But they also understand social well-being and techno-political well-being, and they are supported by their governments.

In Canada, as long as it’s good for my company, that’s enough. But companies rarely come together to say: “We, as Canadian companies, are going to build this infrastructure, develop these laws, and exert sovereignty. We are going to make Canada the place where digital and data sovereignty is real.”

If we want to change, it may have to start from the bottom up. Companies, industry associations, and collectives could demand and build sovereignty, and the government could then support them. We can’t always expect the government to do everything for us.

As for models, Canada should look to Scandinavia, to the EU’s GDPR, maybe even Singapore. Forget the U.S.—they’re dismantling institutions like NOAA. China exerts control, too, but in its own way. Scandinavia and the EU are where the real lessons lie.

Who actually owns Canada’s most important datasets — land, health, or even space data?

Ownership is fragmented, and that’s the heart of the problem. The land registry always angers me — citizens have to pay for their own land data because registries were outsourced to private companies like Teranet. That’s colonial. Land registries and cadastres should be open data. Canadians should know who owns Canadian land — and we’d probably find a lot is foreign-owned.

Health data are also fragmented. They sit with provinces, hospitals, and CIHI [Canadian Institute for Health Information], not Health Canada. Doctors are private businesses billing provinces, hospitals are nonprofits, and data sharing is messy. Even within Ottawa, one hospital system doesn’t connect to another. That’s absurd.

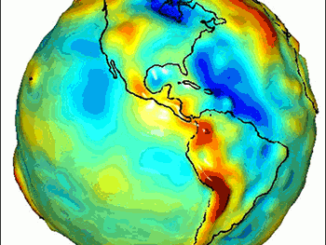

Space data isn’t much better. Usually, the satellite provider owns it, and the government gets access rights. Take Radarsat: MDA owned it, with agreements to provide CSA or CCRS [Canada Centre for Remote Sensing] with access. That’s a licensing arrangement, not ownership. I remember when Harper’s government stopped the sale of Radarsat to Lockheed Martin; the selling of our eyes in the sky to the U.S. would have been a loss of sovereignty. If the U.S. had owned Radarsat, it could have shut off our access entirely. Even icebreakers in the North would have been affected. That decision was the right one, especially in the current political context.

As for ownership, if it’s a government satellite, then CSA or CCRS probably manages the data, but that depends on the deal. Private companies don’t have to share unless they’re funded or under agreement. GEOSS is one model where they share for humanitarian reasons, but there’s no general obligation to do so.

At the end of the day, sovereignty should rest with the Crown — whether through CSA, CCRS, or NRCan — but the arrangements remain fragmented.

I wish we could overcome this balkanized federated approach and find a way to benefit from these assets strategically, cyber-securely, and in the public interest. Historically, Canada has failed to do this. It’s time to stop thinking in colonial ways and start thinking strategically, collectively, and as a nation-state for our collective well-being. We should also take down local jurisdictional barriers to self-interested collaboration.

With ownership so fragmented, how big a challenge is data sharing?

It depends on the type of data, the level of aggregation, the details, the timeliness, and so on. We have open data portals in most provinces and territories, but they don’t contain everything and are not always at the required level of timeliness.

For geospatial data, there’s the Canadian Council on Geomatics (CCOG) Accord. Since 1999 or 2000, provinces and territories have had agreements to share some geospatial data. But the agreements do not cover all data: social, economic, and health data, for example, are left out, as are small jurisdictions and communities. Canada has a balkanized system. Just like we have weird tariffs between provinces, we have weird data-sharing gaps and a lack of interoperability across sectors.

COVID made this clear: health data sharing was a mess. There was no national-scale framework data file for health zones, for instance, as that was the purview of the provinces and not of PHAC or the CCOG. Also, my file in Ottawa should be accessible if I end up in a Vancouver hospital, but it isn’t. Even in Ottawa, one hospital system doesn’t connect to another. That’s ridiculous.

Education, transportation, social services — again, all provincial or territorial. Everything is fragmented.

Does Canada need an overarching body, like the U.S. National Geospatial-Intelligence Agency or the UK Geospatial Commission, to coordinate data strategy?

Yes, we do. In the U.S., agencies like NGA, USGS, NASA, and, until recently, NOAA have played coordinating roles. Canada doesn’t have that kind of structure. We have different federal departments, replicated to some extent at provincial and territorial levels, serving different sectors, and they do not coordinate activities. That would be the work of a well-functioning geospatial data infrastructure: not one that serves only one department, but one that serves them all.

Natural Resources Canada built the Canadian Geospatial Data Infrastructure (CGDI) with a roundtable of directors general from different departments, but it remained land- and environment-based. The Treasury Board also had an open data team, but it is tiny and marginalized.

What we really need is a roundtable of chief data officers from various departments, facilitated by an entity like a secretariat, to meet, share, and coordinate. And it’s not just the usual departments — defence, health, natural resources, environment, transportation — everyone with strategic data should be at the table, including social, economic, and, of course, health.

We actually had these conversations in the early days of open data, from about 2000 to 2010. But we seem to have forgotten. Right now, everything is scattered — StatCan does its own thing, NRCan does its thing, Environment Canada does its thing, and Canada Post does its own thing, although there are more geospatial datasets available as open data than those from social, economic, and health sectors. It is one thing to have these accessible, but we ought to govern their access and use for our well-being, which brings us back to issues of sovereignty and rights.

Canada Post is a perfect example of the problem. They treat the postal code file as proprietary, even though it should be a national dataset. The same goes for the land registry. I’m not against companies making money, but you can still share data and make money.

Do you think we should classify all data as “critical infrastructure”?

I am not sure. One worry I have is that if we start calling everything “critical infrastructure,” the reflex may be to lock them all down again. That would take us right back to the pre–open data days. The challenge is to find a way to recognize the strategic value of these datasets while still keeping them open and usable in the public interest.

What are the consequences of not having coordination across departments?

We don’t even know ourselves anymore. We don’t have an atlas of Canada, so young people cannot see themselves in their own territory. People thought open data would solve this, but open data helps experts, not a grade-five student. We need intermediate artifacts to explain: Where are the forests? Where are the farms? Where are the mines? Who owns the land? Where are the electoral districts? These are basic questions citizens should be able to answer, and patterns they should be able to see.

At the end of the day, it’s the taxpayers funding all of this, so the public should benefit directly. At events like GeoIgnite and other technical geo associations, the public good is not the mainstay of the conversation. Instead, it is innovation, which is great, but ultimately, it is innovation that affects us. Should not all land-based decisions be in the public interest? That’s just common sense. Imagine a proactive disclosure on foreign ownership and the financialization of agriculture and housing. If we could see what and where, we might begin to realize why we have little control over our resources and assets.

Canada used to be innovative in terms of geospatial data infrastructure. We could be again. Carleton’s CIMS lab is one example — they’re building open-source digital twin tools, which is innovation for the public good. However, it is just one small example that could interoperate with a national system like the Arctic Spatial Data Infrastructure. We can still profit, just differently: in ways that don’t undermine sovereignty or the collective good — and perhaps that is the role of a geospatial data infrastructure that serves all sectors at all levels of government for the public good, one that is governed, safe, secure, and sovereign in the full life- and production cycle of our data.
