Several indicators have been used as evidence of global warming, including atmospheric CO2 concentration, surface temperature, sea level rise, Arctic ice extent, land ice, Antarctic ice volume, and others.

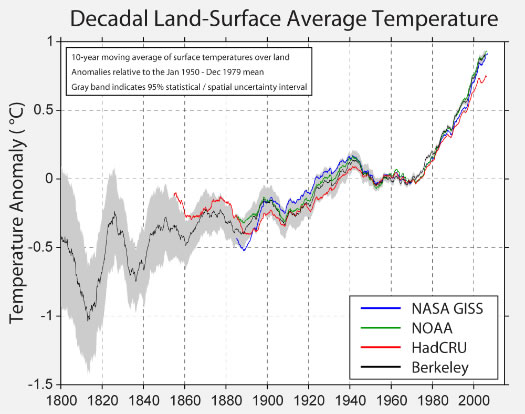

According to the Berkeley Earth Surface Temperature project, the most important of these is the land and sea surface temperature record. Three groups have reported measurements of the change in Earth's surface temperature over varying periods: the NASA Goddard Institute for Space Studies (NASA GISS), NOAA, and the Hadley Centre of the UK Met Office working with the Climatic Research Unit of the University of East Anglia (HadCRU). These results have been criticized in several ways, especially with respect to the choice of stations and the methods for correcting systematic errors.

The Berkeley study set out to do a new, rigorous analysis of the surface temperature record that addresses these issues. The study's objectives are to:

- Merge existing surface station temperature data sets into a new comprehensive raw data set

- Review existing temperature processing algorithms for averaging, homogenization, and error analysis

- Develop new statistical methods to remove some of the limitations present in existing algorithms

- Create and publish a new global surface temperature record and associated uncertainty analysis

- Provide an open platform for further analysis by publishing the complete data and software code

The Berkeley group has developed a new mathematical framework for producing maps and large-scale averages of temperature changes from a spatial network of weather station data. The framework allows short and discontinuous temperature records to be included in the analysis, making it possible to use data that previous analyses excluded. The group has also created a merged data set of 39,390 unique stations by combining 1.6 billion temperature reports from 15 preexisting data archives.
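
To see why short, discontinuous records can still contribute, consider a toy version of the problem. Assume each station's reading is a shared regional anomaly plus a constant station-specific offset, `value[i, t] ≈ theta[t] + offset[i]`. A station then only needs to overlap other stations in time, not span the whole period, for its offset to be estimated. The sketch below fits that model by ordinary least squares with NumPy. It is only in the spirit of the Berkeley framework, not its actual method or code: the function name `fit_regional_series`, the single-region setup, and the absence of noise, weighting, and spatial structure are all simplifying assumptions.

```python
import numpy as np


def fit_regional_series(data):
    """Least-squares fit of data[i, t] ~ theta[t] + offset[i] (hypothetical helper).

    data: 2-D array (stations x time steps) with NaN where a station has no
    report. Assumes every time step has at least one report and that the
    station records overlap enough to link them together.
    Returns (theta, offsets); theta is constrained to sum to zero, so it is
    an anomaly series and the offsets absorb each station's absolute level.
    """
    n_st, n_t = data.shape
    st_idx, t_idx = np.nonzero(~np.isnan(data))  # one row per observation
    n_obs = st_idx.size
    rows = np.arange(n_obs)

    A = np.zeros((n_obs + 1, n_t + n_st))
    A[rows, t_idx] = 1.0         # coefficient of theta[t]
    A[rows, n_t + st_idx] = 1.0  # coefficient of offset[i]
    A[n_obs, :n_t] = 1.0         # extra row sum(theta) = 0 removes the ambiguity
                                 # of shifting theta up and every offset down
    b = np.append(data[st_idx, t_idx], 0.0)

    solution, *_ = np.linalg.lstsq(A, b, rcond=None)
    return solution[:n_t], solution[n_t:]


# Toy example: a warming trend seen by three stations with different
# absolute levels; two of the records are short and one has a gap.
t = np.arange(120)
true_theta = 0.01 * t
true_offsets = np.array([10.0, 15.0, 5.0])
data = true_theta[None, :] + true_offsets[:, None]
data[0, 60:] = np.nan    # station 0 reports only in steps 0-59
data[1, :40] = np.nan    # station 1 reports only in steps 40-119
data[2, 20:31] = np.nan  # station 2 has a gap in steps 20-30

theta, offsets = fit_regional_series(data)
```

Because the records overlap, the fit recovers the shared trend (shifted to zero mean) and each station's level, even though station 0 and station 1 each cover only part of the period.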

Mathematical treatment

The three previous analyses all rely on spatial interpolation using a grid system:

- HadCRU divides the Earth into 5° x 5° latitude-longitude grid cells and associates the data from each station time series with a single cell.

- NASA GISS uses an 8000-element equal-area grid and associates each station time series with multiple grid cells, defining each grid-cell average as a distance-weighted function of temperatures at many nearby station locations.

- NOAA decomposes an estimated spatial covariance matrix into a collection of empirical modes of spatial variability on a 5° x 5° grid. These modes are then used to map station data onto the grid according to the degree of covariance expected between the weather at a station location and the weather at a grid cell center.
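
The cell-based approach in the HadCRU bullet can be sketched in a few lines: put each station in a 5° x 5° cell, average the stations that share a cell, then average the occupied cells with weights proportional to the cosine of the cell-center latitude, since cells shrink in area toward the poles. This is a simplified illustration with hypothetical function names (`cell_index`, `gridded_mean`), not HadCRU's code; it ignores how the real products handle empty cells, baselines, and uncertainty.

```python
import math

import numpy as np


def cell_index(lat, lon, size=5.0):
    """Row/column of the size x size degree cell containing (lat, lon)."""
    n_rows, n_cols = int(180 / size), int(360 / size)
    row = int(math.floor((lat + 90.0) / size))
    col = int(math.floor((lon + 180.0) / size))
    # Points exactly at lat = 90 or lon = 180 are folded into the last cell.
    return min(row, n_rows - 1), min(col, n_cols - 1)


def gridded_mean(stations, size=5.0):
    """Area-weighted mean anomaly from (lat, lon, anomaly) station tuples.

    Stations in the same cell are averaged first, so a dense cluster counts
    once; occupied cells are then weighted by cos(latitude of cell center),
    which is proportional to cell area. Empty cells are simply left out.
    """
    n_rows, n_cols = int(180 / size), int(360 / size)
    sums = np.zeros((n_rows, n_cols))
    counts = np.zeros((n_rows, n_cols))
    for lat, lon, anomaly in stations:
        r, c = cell_index(lat, lon, size)
        sums[r, c] += anomaly
        counts[r, c] += 1

    occupied = counts > 0
    cell_means = sums[occupied] / counts[occupied]
    center_lats = -90.0 + size * (np.arange(n_rows) + 0.5)
    lat_weights = np.cos(np.radians(center_lats))[:, None]
    weights = np.repeat(lat_weights, n_cols, axis=1)[occupied]
    return float(np.sum(cell_means * weights) / np.sum(weights))


stations = [
    (51.0, 1.0, 0.4),  # two stations sharing the 50-55N, 0-5E cell
    (52.0, 2.0, 0.8),
    (0.5, 10.0, 0.2),  # one station in the 0-5N, 10-15E cell
]
result = gridded_mean(stations)
```

Here the two nearby stations count as a single cell, so the result (about 0.35) is lower than the plain three-station mean of about 0.47.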

The Berkeley approach to spatial interpolation does not use gridded data sets at all, which avoids several sources of noise and bias that gridding can introduce.
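
A grid-free method has to estimate the temperature at any location directly from the surrounding stations. The Berkeley averaging paper describes its interpolation as a form of Kriging; the sketch below is a generic simple-Kriging example of that idea, not Berkeley's implementation. It assumes a zero-mean anomaly field, an exponential correlation `exp(-d / L)` with an illustrative length scale of 1000 km, and a small `nugget` term for independent station noise; these values and the function names are hypothetical.

```python
import numpy as np

EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in km between points given in degrees (broadcasts)."""
    phi1, phi2 = np.radians(lat1), np.radians(lat2)
    dphi = phi2 - phi1
    dlam = np.radians(lon2) - np.radians(lon1)
    a = np.sin(dphi / 2) ** 2 + np.cos(phi1) * np.cos(phi2) * np.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * np.arcsin(np.sqrt(np.clip(a, 0.0, 1.0)))


def simple_krige(lats, lons, anomalies, lat0, lon0, length_km=1000.0, nugget=0.1):
    """Simple-Kriging estimate of a zero-mean anomaly field at (lat0, lon0).

    Assumed covariance model: exp(-d / length_km) between locations, plus
    `nugget` on the diagonal for independent station noise.
    Returns (estimate, weights).
    """
    lats, lons = np.asarray(lats, float), np.asarray(lons, float)
    d_stations = great_circle_km(lats[:, None], lons[:, None],
                                 lats[None, :], lons[None, :])
    d_target = great_circle_km(lats, lons, lat0, lon0)
    K = np.exp(-d_stations / length_km) + nugget * np.eye(lats.size)
    k = np.exp(-d_target / length_km)
    weights = np.linalg.solve(K, k)  # Kriging weights for this target point
    return float(weights @ np.asarray(anomalies, float)), weights


lats, lons = [0.0, 0.0, 10.0], [0.0, 10.0, 0.0]
station_anoms = [1.0, 2.0, 3.0]
est_mid, _ = simple_krige(lats, lons, station_anoms, 3.0, 3.0)     # among the stations
est_far, _ = simple_krige(lats, lons, station_anoms, -60.0, 150.0)  # far from all of them
```

Near the stations the estimate is a weighted blend of their anomalies; far from every station the weights shrink toward zero and the estimate falls back to the assumed mean anomaly of zero.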

In October 2011, the Berkeley group submitted four papers for peer review:

- Berkeley Earth Temperature Averaging Process

- Influence of Urban Heating on the Global Temperature Land Average

- Earth Atmospheric Land Surface Temperature and Station Quality in the United States

- Decadal Variations in the Global Atmospheric Land Temperatures

The chart shows the decadal land-surface average temperature, a 10-year moving average of surface temperatures over land calculated by the BEST group, compared with the NASA GISS, NOAA, and HadCRU results. Anomalies are relative to the January 1950 to December 1979 mean. The grey band indicates the 95% statistical and spatial uncertainty interval.
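
The two processing steps named in the caption, anomalies against a fixed base period and a 10-year moving average, can be sketched as follows. The sketch assumes monthly input, a per-calendar-month climatology over January 1950 to December 1979 (a common convention, assumed here rather than taken from the BEST papers), and a simple unweighted 120-month window; the function names and the synthetic series are hypothetical.

```python
import numpy as np


def anomalies(monthly, start_year, base_start=1950, base_end=1979):
    """Monthly anomalies relative to a base-period climatology.

    monthly: 1-D array of monthly means, the first value being January of
    start_year; assumed to cover the whole base period. For each calendar
    month, the mean of that month over base_start..base_end (inclusive) is
    subtracted, which also removes the seasonal cycle.
    """
    monthly = np.asarray(monthly, float)
    idx = np.arange(monthly.size)
    years = start_year + idx // 12
    months = idx % 12
    in_base = (years >= base_start) & (years <= base_end)
    out = np.empty_like(monthly)
    for m in range(12):
        this_month = months == m
        climatology = monthly[this_month & in_base].mean()
        out[this_month] = monthly[this_month] - climatology
    return out


def moving_average(x, window=120):
    """Mean of each run of `window` consecutive values (120 months = 10 years).

    Returns len(x) - window + 1 values; where to plot each one (for example
    at the window's center) is left to the caller.
    """
    return np.convolve(x, np.ones(window) / window, mode="valid")


# Synthetic 1940-1999 series: a seasonal cycle plus a slow warming trend.
i = np.arange(720)
series = 10.0 * np.sin(2 * np.pi * i / 12) + 0.002 * i
anoms = anomalies(series, start_year=1940)
decadal = moving_average(anoms)
```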
