In the US there are 9,200 electric generating units with more than 1,000,000 megawatts of generating capacity, but most of them were built in the 1960s or earlier. There are over 12,000 substations, and the average age of substation transformers is over 40 years, beyond their expected life span. There are more than 300,000 miles of transmission lines in the US, and since 1982 growth in peak demand for electricity has exceeded transmission growth by almost 25% every year, yet incredibly only 668 miles of new interstate transmission lines have been built since 2000. The reliability of the grid is decreasing while our dependence on it is increasing.

For example in 2008 chip

technologies consumed 40% of US power production and this is expected to

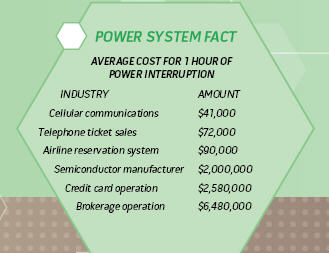

increase to 60% in 2015. Outages and

interruptions cost Americans at least $150 billion annually. As an example, a one hour outage can cost a brokerage operation over $6 million. The risks associated with our current,

increasingly fragile power grid and the impact of global climate change require

us to invest in a more resilient, efficient, and green smart grid.

A smart grid is a much more complicated animal than our current grid. It involves price signals to consumers, distributed generation, automated load management, a new bidirectional communications network, storage, redundancy, and self-healing. Managing and operating the new smart grid is going to require a reliable digital model of the grid, based on accurate, up-to-date engineering information. My experience with utilities and telecommunications firms is that the reliability of current network records/documentation databases is in the range of 40 to 70%. As I have blogged several times over the past several years (here, here, here and here), the most common causes of poor data quality are as-built backlogs, which typically range from months to years, and restricted information flow between the records department and operations/field staff, especially little or no flow of network facilities information from the field back to the records/network documentation department. The impacts of poor data quality are decreased productivity in operations, time-consuming and expensive reporting, longer outages, an unhappy regulator, and dissatisfied customers.

Around the world, regulators are becoming increasingly aware of the importance of data quality as we migrate our power networks to a smart grid architecture. I was in Brazil recently and learned that the national power utility regulator, ANEEL, has promulgated guidelines that require power utility facilities databases to achieve 95% accuracy by 2010. To put teeth in these guidelines, audits will be carried out periodically, and based on the results power utilities could face fines or see impacts to their rate structure. In Brazil this is a compelling event that will require power utilities to invest in technology to optimize business processes for data quality in order to achieve the goal of 95% reliability of their digital network data. This regulation will put Brazil in a position to have one of the most reliable digital models of its network infrastructure in the world, which is a prerequisite for a Brazilian smart grid. I understand that the Brazilian water regulator, ANA, has undertaken a similar initiative with respect to the quality of digital models of water utilities.
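To make the 95% figure concrete, here is a rough Python sketch of how a records audit might be scored: a sample of assets is inspected in the field and each one is compared with its entry in the facilities database. This is only an illustration under my own assumptions. I do not know the details of ANEEL's actual audit methodology, and the record fields, asset IDs, and the `audit_accuracy` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FacilityRecord:
    """One asset, as stored in the records database or as seen in the field.

    The fields are hypothetical; a real facilities database has many more.
    """
    asset_type: str      # e.g. "transformer", "pole"
    location: str        # hypothetical location key
    rating_kva: float


def audit_accuracy(database, field_sample):
    """Return the fraction of field-inspected assets whose database record
    matches the field observation exactly.

    An asset that is missing from the database counts as a mismatch.
    """
    if not field_sample:
        raise ValueError("field sample is empty")
    matches = sum(
        1
        for asset_id, observed in field_sample.items()
        if database.get(asset_id) == observed
    )
    return matches / len(field_sample)


# Assumed target, taken from the 95% accuracy figure quoted above.
TARGET = 0.95

# Made-up records database.
database = {
    "T-001": FacilityRecord("transformer", "feeder-12/pole-7", 75.0),
    "T-002": FacilityRecord("transformer", "feeder-12/pole-9", 50.0),
    "T-003": FacilityRecord("transformer", "feeder-14/pole-2", 100.0),
}

# Made-up field inspection of four assets.
field_sample = {
    "T-001": FacilityRecord("transformer", "feeder-12/pole-7", 75.0),   # matches
    "T-002": FacilityRecord("transformer", "feeder-12/pole-9", 75.0),   # replaced, never recorded
    "T-003": FacilityRecord("transformer", "feeder-14/pole-2", 100.0),  # matches
    "T-004": FacilityRecord("transformer", "feeder-14/pole-5", 50.0),   # stuck in the as-built backlog
}

accuracy = audit_accuracy(database, field_sample)
meets_target = accuracy >= TARGET
print(f"sample accuracy: {accuracy:.0%}, meets target: {meets_target}")
```

In this made-up sample two of the four inspected assets match, so the database scores 50% and misses the target. The two mismatches correspond to the causes described earlier: a field change that never flowed back to the records department, and an asset still waiting in the as-built backlog.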

Many people believe that it is simply a matter of time before regulators in other countries around the world follow the Brazilian example and require power utilities, other utilities, and telcos to achieve the same or higher standard of data quality in their network facilities databases.
