In August 2004, NIST released a study that attempted to identify and estimate the efficiency losses in the U.S. capital facilities industry resulting from inadequate interoperability among computer-aided design, engineering, and software systems. The study covers design, engineering, facilities management, and business process software systems, as well as redundant paper records management, across all facility life-cycle phases. Based on interviews and survey responses, it quantified $15.8 billion in annual interoperability costs for the capital facilities industry in 2002. In addition to the quantified costs, respondents indicated that interoperability problems carry further significant inefficiency and lost-opportunity costs that were beyond the scope of the analysis. On this basis, the NIST team concluded that the $15.8 billion figure is likely a conservative estimate.

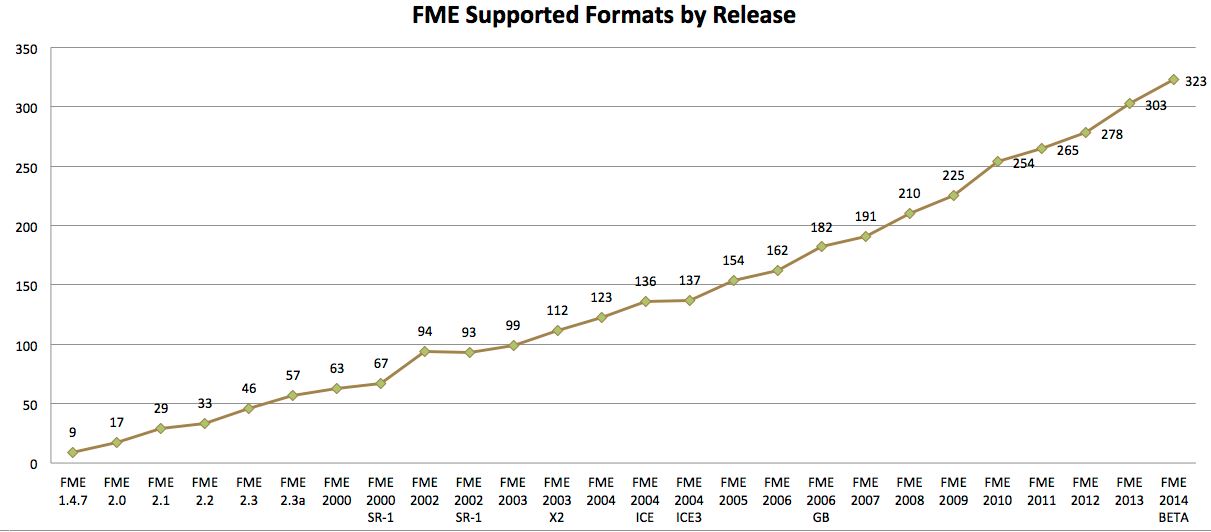

Arguably the geospatial industry has done more to address the issue of interoperability than any other major sector. One of the reasons is that spatial data has always come from diverse sources. Even when a dominant player such as ESRI or Google emerges, it has not been possible for that player to dictate a unified way of achieving interoperability. Safe Software is a major player in the interoperability industry, and since the 1990s it has maintained a graph showing the number of file formats that its FME translation engine supports. The graph shows that the number of new formats added each year has remained roughly constant, if anything tending to increase. In the future we may be talking more about “formats and systems”, because platforms such as Salesforce, Google Maps Engine, and ArcGIS Online are really start points and endpoints for data; even so, there is every reason to expect a similar growth pattern for systems. The rule of thumb is that new devices and new technologies require new file formats and APIs, and there seem to be more new things every year.

The geospatial sector has addressed interoperability first of all through standards. Standards from the Open Geospatial Consortium (OGC) have been widely adopted by governments. OGC web service standards for exchanging spatial data, such as WMS (Web Map Service) and WFS (Web Feature Service), together with encodings such as GML (Geography Markup Language), are almost universally supported by government mapping agencies.
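
To make the idea of a standard web service concrete, here is a minimal sketch of a WMS 1.3.0 GetMap request built with Python's standard library. The parameter names (SERVICE, VERSION, REQUEST, LAYERS, STYLES, CRS, BBOX, WIDTH, HEIGHT, FORMAT) come from the OGC WMS specification; the function name, the endpoint URL, and the layer names are hypothetical placeholders, and a real server advertises its actual layers in its GetCapabilities response.

```python
from urllib.parse import urlencode


def build_wms_getmap_url(base_url, layers, bbox, width, height,
                         crs="EPSG:4326", image_format="image/png"):
    """Build a WMS 1.3.0 GetMap URL using key-value-pair (KVP) encoding.

    Hypothetical helper for illustration only.
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": ",".join(layers),
        "STYLES": "",  # empty value requests each layer's default style
        "CRS": crs,
        # WMS 1.3.0 follows the CRS axis order; for EPSG:4326 that is
        # minLat,minLon,maxLat,maxLon.
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": image_format,
    }
    # Leave ',', ':' and '/' unescaped so the URL stays readable.
    return base_url + "?" + urlencode(params, safe=",:/")


url = build_wms_getmap_url(
    "https://example.org/wms",   # hypothetical endpoint
    layers=["roads", "rivers"],  # hypothetical layer names
    bbox=(45.0, -75.0, 46.0, -74.0),
    width=800,
    height=600,
)
print(url)
```

Because every conforming server accepts the same parameters, the same client code can in principle be pointed at any agency's WMS; only the base URL and layer names change.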

The 2005 NASA Geospatial Interoperability Return on Investment Study concluded that projects that adopted and implemented geospatial interoperability standards saved 26.2% compared to projects that relied on proprietary standards. In other words, for every $4.00 spent on projects based on proprietary platforms, the same value could be achieved with roughly $3.00 if the project were based on open standards.
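
The $4.00-to-$3.00 comparison is a rounded restatement of the 26.2% figure:

$$
4.00 \times (1 - 0.262) = 4.00 \times 0.738 = 2.952 \approx 3.00
$$

The unrounded cost is closer to $2.95, so the $3.00 version slightly understates the reported savings.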

LandXML, MultiSpeak, and GeoJSON are examples of standards involving geospatial data that are not associated with a major standards body such as the OGC, W3C, or IETF, but are non-proprietary open standards that have been widely adopted.
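
Part of GeoJSON's appeal is that it is plain JSON. The sketch below builds a small FeatureCollection with nothing but Python's `json` module; the structure (Feature, geometry, properties, FeatureCollection) follows the GeoJSON format as now published in RFC 7946, while the helper name `point_feature` and the approximate city coordinates are illustrative assumptions.

```python
import json


def point_feature(lon, lat, properties):
    """Return a GeoJSON Feature with a Point geometry (hypothetical helper)."""
    return {
        "type": "Feature",
        # GeoJSON positions are [longitude, latitude], not [lat, lon].
        "geometry": {"type": "Point", "coordinates": [lon, lat]},
        "properties": properties,
    }


# Illustrative features with approximate city-centre coordinates.
collection = {
    "type": "FeatureCollection",
    "features": [
        point_feature(-123.1207, 49.2827, {"name": "Vancouver"}),
        point_feature(-75.6972, 45.4215, {"name": "Ottawa"}),
    ],
}

text = json.dumps(collection, indent=2)
print(text)
```

The [longitude, latitude] axis order is worth noting: it is a common source of errors when data moves between formats that use different conventions.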

Another vehicle for interoperability is geospatial translation engines such as FME, which not only convert data from one file format to another but also provide a server for publishing data stored in 320-odd formats and systems. This is a major step forward, because it avoids having to make copies of the data in another format. As a rule of thumb, once you make a copy of a spatial dataset, you’ve created a problem.
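
FME's internals are proprietary and are not shown here, but the basic job of a format translation can be illustrated with a deliberately tiny, hypothetical example: converting GeoJSON Point features into a KML 2.2 document using Python's standard library. The function name `geojson_points_to_kml` is made up for this sketch, and it ignores everything a production engine must handle (lines, polygons, attribute schemas, styling, coordinate reference systems).

```python
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"


def geojson_points_to_kml(feature_collection):
    """Translate the Point features of a GeoJSON FeatureCollection into KML text.

    Hypothetical helper for illustration: non-Point geometries are skipped.
    """
    # Declaring the KML namespace as a plain xmlns attribute keeps tag names simple.
    kml = ET.Element("kml", xmlns=KML_NS)
    document = ET.SubElement(kml, "Document")
    for feature in feature_collection.get("features", []):
        geometry = feature.get("geometry") or {}
        if geometry.get("type") != "Point":
            continue  # lines, polygons, etc. are out of scope for this sketch
        placemark = ET.SubElement(document, "Placemark")
        name = (feature.get("properties") or {}).get("name")
        if name is not None:
            ET.SubElement(placemark, "name").text = str(name)
        point = ET.SubElement(placemark, "Point")
        lon, lat = geometry["coordinates"][:2]
        # KML and GeoJSON both use longitude,latitude order.
        ET.SubElement(point, "coordinates").text = f"{lon},{lat}"
    return ET.tostring(kml, encoding="unicode")


# Illustrative input: one Point (translated) and one LineString (skipped).
fc = {
    "type": "FeatureCollection",
    "features": [
        {
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": [-123.1207, 49.2827]},
            "properties": {"name": "Vancouver"},
        },
        {
            "type": "Feature",
            "geometry": {"type": "LineString", "coordinates": [[0, 0], [1, 1]]},
            "properties": {"name": "Example line"},
        },
    ],
}

kml_text = geojson_points_to_kml(fc)
print(kml_text)
```

Even this toy case shows why copies are risky: the LineString is silently dropped, so the KML output is no longer a faithful copy of the source.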

And finally there are de facto standards such as the ESRI shapefile, Autodesk DXF, and others. Google’s KML (originally Keyhole Markup Language) is a unique example of a de facto standard that became an official OGC standard, thanks to the foresight of Google and the OGC.