DigitalGlobe owns and operates a constellation of commercial earth observation satellites. The latest is WorldView-3, which is capable of about 30 cm resolution. DigitalGlobe’s satellites collect 3,000,000 square kilometers of Earth imagery every day, which amounts to about 70 terabytes of data per day. DigitalGlobe also provides online access to 15 years of earth imagery, about 90 petabytes. DigitalGlobe has collected approximately 80% of all high-resolution commercial imagery acquired since 2010. Clearly, as a user you can’t download all of this data, so how can users search through this huge data set and generate a product such as a vegetation map for Tanzania?

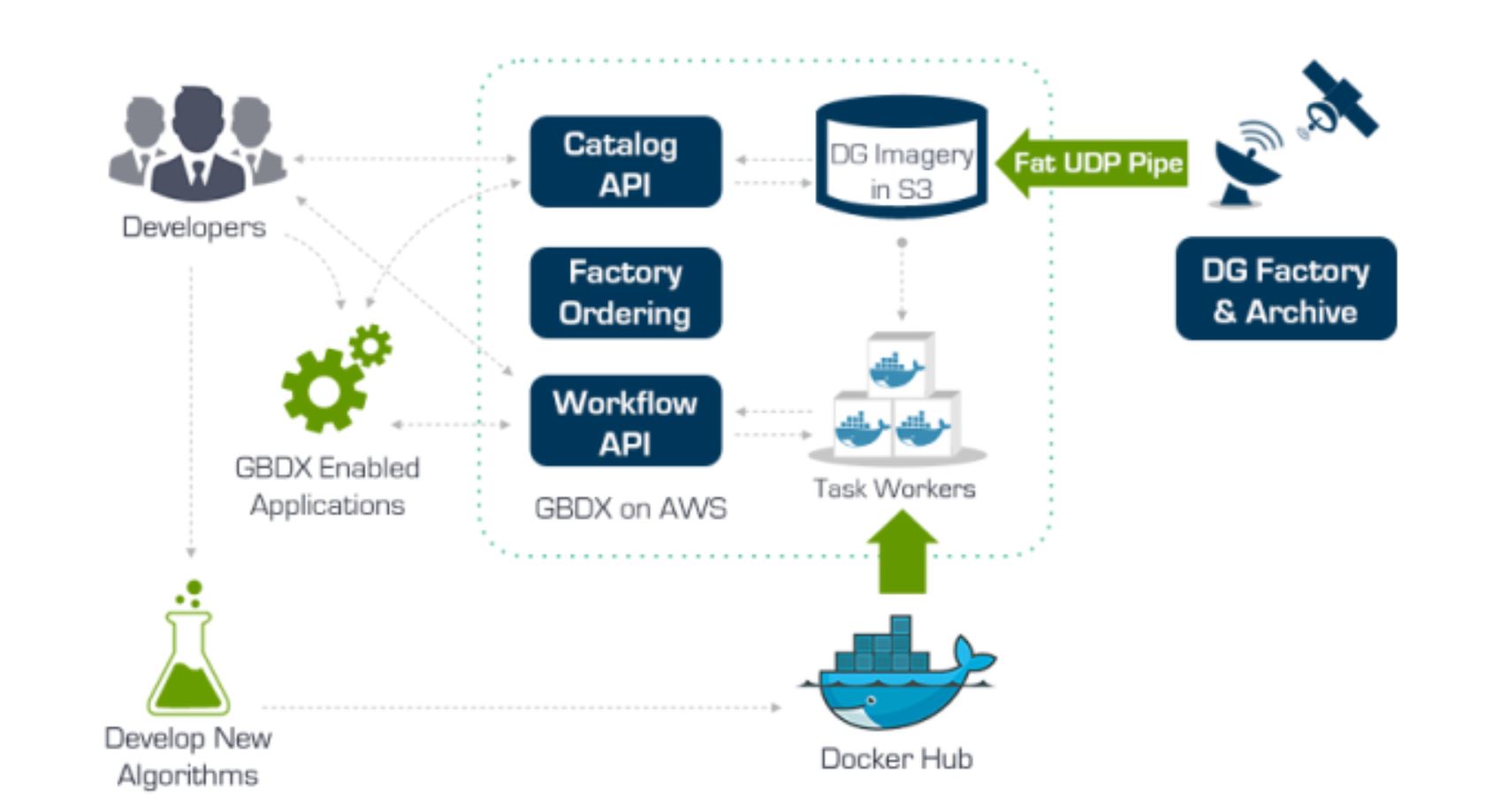

At the GeoBuiz 2016 Summit in Bethesda this week, Walter Scott, CTO and founder of DigitalGlobe, outlined how the company is making this vast amount of high-resolution data accessible to and usable by customers. To make it possible for customers to search and process this data efficiently, DigitalGlobe has put all of the data on the public cloud (Amazon) and has provided a platform, GBDX, that supports efficient search, processing and workflows. GBDX is an online environment for efficiently running advanced algorithms for information extraction from imagery datasets at scale. It is exposed as a set of RESTful APIs. Algorithms that have already been implemented by DigitalGlobe or its partners include car counting, orthorectification, land use, land cover, and atmospheric compensation.

One of the most important efficiencies of the GBDX architecture is that it avoids having to move huge volumes of data over the internet. It does this by bringing the application to the data, rather than the data to the application. For example, to search through the data, GBDX provides a catalog API that uses 39 different image attributes such as geographic location, cloud cover percentage, sensor type, sun angle and others. The search runs locally on Amazon, avoiding pushing data over the internet.
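To make the idea of attribute-based search concrete, here is a minimal sketch in Python. It is not the GBDX catalog API: the `ImageRecord` fields, the `search` function, the sample records, and the rough Tanzania bounding box are all hypothetical, chosen only to show how filtering on a few attributes (location, cloud cover, sensor type) narrows a catalog down to the images worth processing. In GBDX the equivalent filtering happens next to the data, so only the matching identifiers need to come back to the user.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set, Tuple


@dataclass(frozen=True)
class ImageRecord:
    """Hypothetical catalog entry with a handful of searchable attributes."""
    image_id: str
    lon: float               # longitude of the image center, in degrees
    lat: float               # latitude of the image center, in degrees
    cloud_cover_pct: float   # cloud cover percentage, 0 to 100
    sensor: str              # sensor type label (made up for this sketch)


def search(
    catalog: Iterable[ImageRecord],
    bbox: Tuple[float, float, float, float],
    max_cloud_cover_pct: float,
    sensors: Optional[Set[str]] = None,
) -> List[str]:
    """Return the ids of records inside bbox that pass the attribute filters.

    bbox is (min_lon, min_lat, max_lon, max_lat).
    """
    min_lon, min_lat, max_lon, max_lat = bbox
    hits = []
    for rec in catalog:
        inside = min_lon <= rec.lon <= max_lon and min_lat <= rec.lat <= max_lat
        clear_enough = rec.cloud_cover_pct <= max_cloud_cover_pct
        sensor_ok = sensors is None or rec.sensor in sensors
        if inside and clear_enough and sensor_ok:
            hits.append(rec.image_id)
    return hits


# Illustrative records only; none of these are real catalog entries.
CATALOG = [
    ImageRecord("img-001", 35.0, -6.0, 5.0, "WORLDVIEW03"),
    ImageRecord("img-002", 35.5, -6.5, 40.0, "WORLDVIEW03"),
    ImageRecord("img-003", 2.35, 48.85, 2.0, "WORLDVIEW02"),
]

# Rough bounding box around Tanzania (an approximation for illustration).
TANZANIA_BBOX = (29.0, -12.0, 41.0, -1.0)

low_cloud_ids = search(CATALOG, TANZANIA_BBOX, max_cloud_cover_pct=10.0)
```

With these sample records, only `img-001` is both inside the box and under the 10 percent cloud cover threshold.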

The GBDX API includes support for workflows. A workflow is a sequence of tasks executed against sets of data. A typical workflow is initiated with a search for the data you want to process. The selected data is assigned to a working set, and then processed through a sequence of steps to generate your desired product. For example, a typical product would be an orthorectified vegetation map. Again the workflow runs locally on Amazon, avoiding pushing data over the internet during processing. Only the final product resulting from the workflow, such as a vegetation map, would be transferred over the internet to the customer.
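The workflow concept described above can be sketched as a simple pipeline: a working set goes in, each task transforms the output of the previous one, and only the final product comes out. This is an illustrative sketch, not the GBDX workflow API. The task functions `orthorectify` and `vegetation_index` are hypothetical stand-ins that just tag image names so the order of operations is visible.

```python
from typing import Callable, Iterable, List

# A task takes the current working set and returns the transformed set.
Task = Callable[[List[str]], List[str]]


def orthorectify(images: List[str]) -> List[str]:
    """Hypothetical stand-in for an orthorectification task."""
    return [f"ortho({name})" for name in images]


def vegetation_index(images: List[str]) -> List[str]:
    """Hypothetical stand-in for a task that derives a vegetation map."""
    return [f"veg({name})" for name in images]


def run_workflow(working_set: Iterable[str], tasks: List[Task]) -> List[str]:
    """Run the tasks in order, feeding each task the previous task's output."""
    data = list(working_set)
    for task in tasks:
        data = task(data)
    return data


# The working set would normally come from a catalog search.
product = run_workflow(["img-001", "img-002"], [orthorectify, vegetation_index])
print(product)  # ['veg(ortho(img-001))', 'veg(ortho(img-002))']
```

The point of the design is that every intermediate result stays inside `run_workflow`, which in the GBDX case means inside the cloud, and only `product` is handed back to the caller.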

GBDX uses the cloud infrastructure of Amazon Web Services (AWS). Data is stored in S3 ‘buckets’ that are accessed through GBDX RESTful web service APIs. GBDX includes built-in tools supporting remote sensing algorithms from DigitalGlobe and ENVI. GBDX is Python-friendly and allows you to create your own tools.

One of the application areas that the platform makes possible is deep learning. The basic concept is that software can simulate the brain’s large array of neurons as an artificial “neural network”. Large volumes of input data and some way of quantitatively assessing the results are required to train the system. With the greater depth enabled by new computers and algorithms, remarkable advances in speech and image recognition are being achieved. For example, a Google deep-learning system that had been shown 10 million images from YouTube videos proved almost twice as good as any previous image recognition effort at identifying objects such as cats. Microsoft has demonstrated speech software that transcribes spoken words into English text with an error rate of only 7 percent.

But deep learning requires a lot of compute power and a lot of training. GBDX provides the computational power to apply deep learning to earth observation, and DigitalGlobe’s data library can provide the training data sets. What is still required is a scalable way of assessing and quantifying the results. Walter Scott suggested crowdsourcing as a way of achieving the scale required for earth observation training.
