One of the scenarios that is becoming increasingly common especially in government at the national level is an IT solution for sharing data, often referred to as spatial data infrastructure or SDI. In

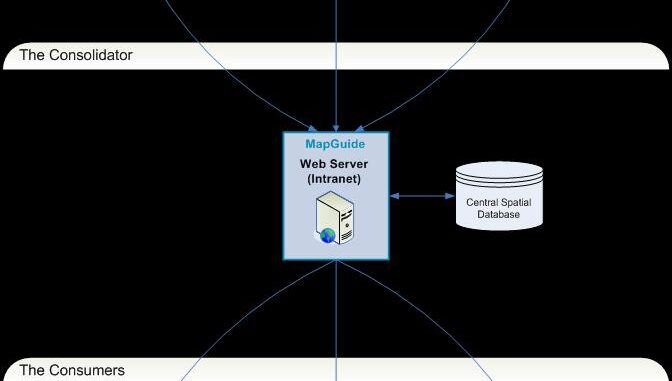

most organizations this is what I would call a Web 1.0 application where spatial data that is created somewhere within the government, for example in the national mapping agency, and is made available through a single portal to consumers of the data. Initially, the consumers are typically other government agencies, but over time the portal frequently evolves into a public portal. Often in addition to providing a description of the data and access to the data, the portal will also include metadata about the data. I’ve included a logical architecture (thanks to Bruce Argue for this), that illustrates what a typical SDI looks like logically. There are creators, who create data, a consolidator or portal which provides a gateway to and metadata about the data, and consumers, who use the data.

Challenge

Typically the creators, or data providers, have used applications from multiple vendors to develop their datasets. The consolidator therefore needs to be able to consume data in Autodesk, Oracle Spatial, ESRI, MapInfo, Intergraph, and Bentley formats and then publish it through the portal in a common, standards-based way so that everyone in the organization can access it.

Solutions

From an IT perspective the solution to this type of problem rests on two basic components, standards-based interoperability and a scalable architecture.

For spatial data, fortunately the Open Geospatial Consortium (OGC) has defined standards which are becoming widely adopted in government. The OGC’s open web standards WMS and WFS are the key to interoperability in a web environment. In the context of SDI this means that the consolidator portal needs to be able to publish WMS/WFS compliant data and consumers need to be able to consume WMS and WFS datasets. As I mentioned in a previous blog, most vendors of geospatial products support at least WMS, and increasingly WFS.
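
To make the two standards a little more concrete, here is a minimal sketch of what a consumer's requests to the portal might look like. The endpoint URL, layer name, and feature type name are made up for illustration; the query parameters are the standard key-value pairs for WMS 1.3.0 `GetMap` and WFS 2.0.0 `GetFeature`. A real portal may support different versions, so treat this as an assumption rather than a recipe.

```python
from urllib.parse import urlencode

# Hypothetical portal endpoint, used only for illustration.
PORTAL = "https://sdi.example.gov/ows"


def wms_getmap_url(base, layer, bbox, width=800, height=600, crs="EPSG:4326"):
    """Build a WMS 1.3.0 GetMap request, which returns a rendered map image.

    bbox follows the axis order of the CRS; for EPSG:4326 in WMS 1.3.0
    that is (min lat, min lon, max lat, max lon).
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",  # empty value asks for the server's default style
        "CRS": crs,
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": "image/png",
    }
    return f"{base}?{urlencode(params)}"


def wfs_getfeature_url(base, type_name, count=100):
    """Build a WFS 2.0.0 GetFeature request, which returns the features themselves."""
    params = {
        "SERVICE": "WFS",
        "VERSION": "2.0.0",
        "REQUEST": "GetFeature",
        "TYPENAMES": type_name,
        "COUNT": count,  # cap on the number of features returned
    }
    return f"{base}?{urlencode(params)}"


# "roads" and "sdi:roads" are hypothetical layer and feature type names.
print(wms_getmap_url(PORTAL, "roads", (40.0, -75.0, 41.0, -74.0)))
print(wfs_getfeature_url(PORTAL, "sdi:roads", count=10))
```

The difference matters for an SDI: WMS hands the consumer a picture of the data, while WFS hands over the features, which is what makes querying and analysis on the consumer side possible.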

From an architectural perspective there are several solutions to the problem of publishing datasets stored in different formats through a WMS/WFS portal.

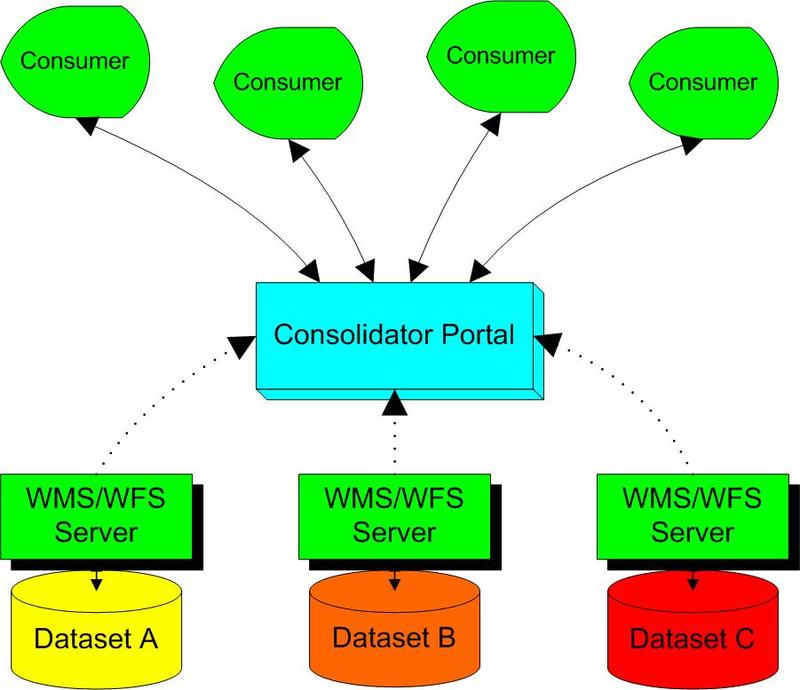

Clearinghouse

A common architecture is a clearinghouse, where the portal simply includes descriptions, metadata, and a URL for each dataset. In the past this type of approach was common, but the URL would redirect you to a web site where you could download the data in whatever format the data creator supported. The advantage of a standards-based architecture as illustrated here is that the data is accessible through a common standards-based web services API.

An advantage of this approach is that the portal is relatively simple to implement because it does not publish data directly. In addition, it puts the onus on the data creator, rather than the consolidator, to ensure that the data is accessible.

A disadvantage is that cross-dataset queries are limited to the contents of the metadata, since to query the actual data you need to go to the website of each dataset. Another potential disadvantage is that the data creators may not all support the same versions of the WMS and WFS standards, so you may not find the same level of data access for all datasets.
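
As a rough illustration of why a clearinghouse is simple to build, and where its limits lie, here is a sketch of a catalog that holds only metadata and a service URL per dataset. The record fields, dataset names, URLs, and version lists are all hypothetical. Note that the search can only look at metadata, and that the versions every creator has in common may be fewer than you would like.

```python
from dataclasses import dataclass


@dataclass
class CatalogEntry:
    """One clearinghouse record: metadata plus a pointer to the creator's service."""
    title: str
    abstract: str
    keywords: list
    service_url: str          # the creator hosts the data; the portal only links to it
    wms_versions: tuple = ()
    wfs_versions: tuple = ()


def search(catalog, term):
    """Cross-dataset search, limited to what the metadata says."""
    term = term.lower()
    return [
        e for e in catalog
        if term in e.title.lower()
        or term in e.abstract.lower()
        or any(term in k.lower() for k in e.keywords)
    ]


def common_versions(entries, attr):
    """Protocol versions that every listed creator supports."""
    sets = [set(getattr(e, attr)) for e in entries]
    return set.intersection(*sets) if sets else set()


# Hypothetical records, for illustration only.
catalog = [
    CatalogEntry("National Roads", "Road centrelines", ["transport", "roads"],
                 "https://mapping.example.gov/ows", ("1.1.1", "1.3.0"), ("1.1.0", "2.0.0")),
    CatalogEntry("Land Parcels", "Cadastral parcels", ["cadastre", "land"],
                 "https://cadastre.example.gov/ows", ("1.1.1",), ("1.1.0",)),
]

print([e.title for e in search(catalog, "roads")])
print(common_versions(catalog, "wms_versions"))
print(common_versions(catalog, "wfs_versions"))
```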

Spatial Warehouse

This is probably the most typical approach at the present time. Periodically, data creators upload a copy of their current dataset to the consolidator, who converts it and loads it into a spatial data warehouse consisting of a spatially-enabled relational database management system (RDBMS). This process can be automated so that the datasets are refreshed periodically. The frequency with which this occurs depends on the volatility and perishability of the data.

The primary advantage of this approach is that it has minimal impact on the operational processes of the data creators. Another advantage is that since the data is stored in a common spatially-enabled RDBMS, spatial SQL queries on each of the datasets are supported. Potentially, cross-dataset queries can also be defined. In addition, uploads can be timed to occur at off-peak times so that operational systems are not affected by large uploads during normal business hours, when network capacity is restricted. For remote sites with poor network connections, datasets can be provided to the central office on CDs.

This approach requires that RDBMS schemas for all of the datasets be defined. In general each dataset will require its own schema. You also have to identify applications such as FME for data conversion and write scripts for converting each dataset and loading it into the RDBMS.
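
To give a feel for the moving parts, here is a small sketch of the warehouse pattern: one schema per dataset, a refresh step that replaces a dataset with the latest upload, and a cross-dataset query. It makes some loud assumptions: Python's built-in `sqlite3` stands in for a spatially-enabled RDBMS, bounding-box columns stand in for real geometry types, and the tables, columns, and rows are invented. The conversion step (the part a tool like FME would handle) is not shown.

```python
import sqlite3

# One schema per dataset. Bounding-box columns are a stand-in for a real
# geometry type in a spatially-enabled RDBMS.
SCHEMA = """
CREATE TABLE roads   (id INTEGER PRIMARY KEY, name  TEXT,
                      minx REAL, miny REAL, maxx REAL, maxy REAL);
CREATE TABLE parcels (id INTEGER PRIMARY KEY, owner TEXT,
                      minx REAL, miny REAL, maxx REAL, maxy REAL);
"""
ROAD_COLS = ("id", "name", "minx", "miny", "maxx", "maxy")
PARCEL_COLS = ("id", "owner", "minx", "miny", "maxx", "maxy")


def open_warehouse():
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def refresh(conn, table, columns, rows):
    """Replace a dataset with the creator's latest upload, in one transaction."""
    placeholders = ", ".join("?" for _ in columns)
    with conn:  # commits on success, rolls back on error
        conn.execute(f"DELETE FROM {table}")
        conn.executemany(
            f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})",
            rows,
        )


def parcels_touching_roads(conn):
    """Cross-dataset query: parcels whose bounding box overlaps a road's."""
    sql = """
        SELECT p.owner, r.name
        FROM parcels AS p
        JOIN roads AS r
          ON p.minx <= r.maxx AND p.maxx >= r.minx
         AND p.miny <= r.maxy AND p.maxy >= r.miny
        ORDER BY p.owner, r.name
    """
    return conn.execute(sql).fetchall()


conn = open_warehouse()
refresh(conn, "roads", ROAD_COLS, [
    (1, "Main St", 0, 0, 10, 1),
    (2, "Hill Rd", 20, 20, 30, 21),
])
refresh(conn, "parcels", PARCEL_COLS, [
    (1, "Alice", 2, 0.5, 4, 3),
    (2, "Bob", 50, 50, 60, 60),
    (3, "Carol", 25, 20.5, 26, 22),
])
print(parcels_touching_roads(conn))
```

In a real warehouse the join condition would be a spatial predicate from the database's spatial SQL, and `refresh` would run on a schedule at off-peak times.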

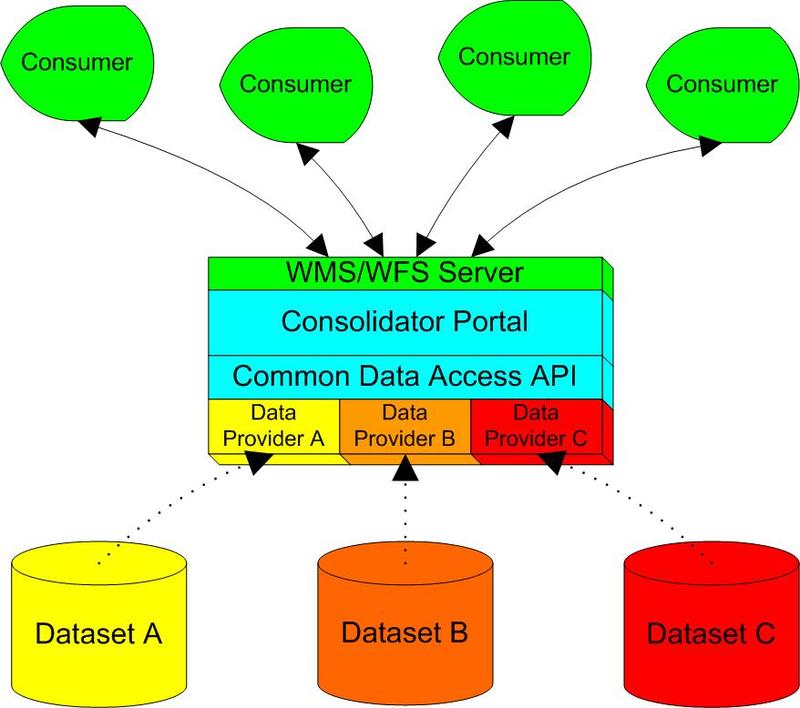

Distributed Databases

Another approach is based on an intelligent portal that provides a common data access API to all the datasets. This approach requires data providers for the different types of spatial datasets, such as ESRI shape, Autodesk DWG, and Oracle Spatial.

One advantage of this approach is that each dataset is current and accessible through a common programmable interface, though you should bear in mind that in general each will have its own data model and the portal needs to reflect this. Each dataset is individually queryable using the common data access API. Another potential advantage is that the portal can also provide cross-dataset queries. A potential disadvantage is that where network capacity is limited, for example on a low-bandwidth WAN, there may simply not be enough capacity, so that performance becomes unacceptable.
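
The idea of a common data access API backed by per-format providers can be sketched roughly as follows. Everything here is hypothetical: the `DataProvider` interface, the `Portal` class, and the in-memory provider that stands in for real shapefile, DWG, or Oracle Spatial providers. The point is only that the portal talks to every source through the same call, while each source keeps its own data model.

```python
from abc import ABC, abstractmethod


class DataProvider(ABC):
    """Common data access API that every format-specific provider implements."""

    @abstractmethod
    def query(self, bbox):
        """Return the features that fall inside bbox = (minx, miny, maxx, maxy)."""


class InMemoryProvider(DataProvider):
    """Stand-in for a real provider; a real one would read the live source
    on every call, which is why the data is always current."""

    def __init__(self, features):
        self.features = features

    def query(self, bbox):
        minx, miny, maxx, maxy = bbox
        return [
            f for f in self.features
            if minx <= f["x"] <= maxx and miny <= f["y"] <= maxy
        ]


class Portal:
    """Routes queries to the registered providers through the common API."""

    def __init__(self):
        self._providers = {}

    def register(self, name, provider):
        self._providers[name] = provider

    def query(self, name, bbox):
        """Query a single dataset."""
        return self._providers[name].query(bbox)

    def query_all(self, bbox):
        """Cross-dataset query: fan the same request out to every provider."""
        return {name: p.query(bbox) for name, p in self._providers.items()}


portal = Portal()
# Each dataset keeps its own attributes; only the access call is shared.
portal.register("roads", InMemoryProvider([
    {"id": 1, "x": 1, "y": 1, "name": "Main St"},
    {"id": 2, "x": 9, "y": 9, "name": "Hill Rd"},
]))
portal.register("parcels", InMemoryProvider([
    {"id": "p1", "x": 2, "y": 2, "owner": "Alice"},
]))

print(portal.query_all((0, 0, 5, 5)))
```

Every call in `query_all` crosses the network to a live source, which is exactly where the bandwidth concern comes from.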

But with the future in mind, one of the most exciting things about this approach is that it supports Web 2.0 interactivity; in other words, users can edit the data.
