As the planet’s challenges intensify, human innovation rises to meet them.

However, unlocking timely and actionable insights from the vast volumes of Earth observation data demands more than traditional GIS platforms, which often face limitations in performance, scalability, and usability.

To tackle global challenges at a planetary scale, the geospatial community requires next-generation processing power, seamless access to diverse datasets, and intelligent automation that enables real-time collaboration and analysis.

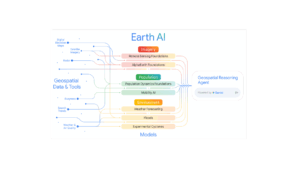

Google Earth AI represents a major step toward that vision. It pairs robust geospatial foundation models with a Geospatial Reasoning Agent built on Google’s latest Gemini models, making it possible to perform complex, real-world reasoning across imagery, environmental, and population data and to surface insights about our planet far faster than manual workflows allow.

Inside Earth AI: Powerful foundation models

Earth AI models are trained on specialized geospatial datasets spanning three categories of Earth data, each capturing a distinct aspect of our planet. Accordingly, there are three types of foundation models.

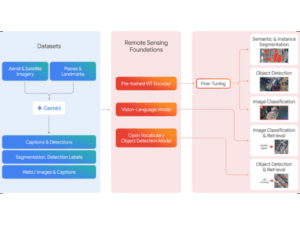

Remote Sensing (Imagery) models: The Imagery Foundation model tackles key challenges in Earth observation, such as datasets with missing labels and image distributions that differ from ordinary photographs, opening new opportunities for visual analysis. It serves as a framework for scalable, versatile remote sensing analysis, connecting progress in general computer vision with the specific requirements of geospatial data.

Remote Sensing Foundations models inherently support natural language queries, allowing users without specialized expertise to quickly analyze images containing objects or events.

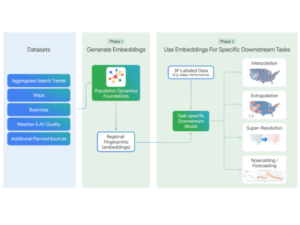

Population models: The Population Dynamics Foundations model synthesizes multiple datasets to characterize human behavioral patterns within a spatial context. It represents the built environment using map data, the natural environment through weather and air quality information, and human behavior via Search Trends and anonymized busyness metrics.

Environment (Weather & Climate) models: The Environment models and APIs deliver advanced insights into weather, climate, air quality, and natural hazards, making complex geospatial information broadly accessible. They draw on three key environmental signals:

- Weather Forecasting: Powered by machine learning models, the Google Maps Platform Weather API provides hourly forecasts up to 240 hours and daily forecasts up to 10 days for parameters such as temperature, precipitation, wind, and UV index.

- Flood Forecasting: The Google Flood Forecasting API offers real-time riverine flood predictions using gauge data, detailing expected inundation areas, severity levels, and probabilities.

- Experimental Cyclone Forecasting: Google’s AI-based cyclone model, built on stochastic neural networks, predicts cyclone formation, track, intensity, size, and shape by generating 50 possible scenarios.
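An ensemble of 50 scenarios is most useful once it is summarized as a probability. The sketch below is not Google’s cyclone model or any of its APIs. It is a minimal illustration, on made-up tracks, of one common way to read an ensemble: count the fraction of scenarios whose track passes within a chosen radius of a site. The function names, the toy tracks, the site, and the 100 km radius are all assumptions made for this example.

```python
import math

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two (lat, lon) points in kilometres."""
    earth_radius_km = 6371.0  # mean Earth radius
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * earth_radius_km * math.asin(math.sqrt(a))

def strike_probability(scenarios, site, radius_km):
    """Fraction of ensemble tracks that pass within radius_km of site.

    scenarios: list of tracks, each a list of (lat, lon) points.
    site: (lat, lon) of the location of interest.
    """
    if not scenarios:
        raise ValueError("need at least one scenario")
    hits = sum(
        1
        for track in scenarios
        if any(haversine_km(lat, lon, site[0], site[1]) <= radius_km
               for lat, lon in track)
    )
    return hits / len(scenarios)

# Toy ensemble (hypothetical data): 50 straight northward tracks,
# each shifted 0.1 degrees further east than the previous one.
scenarios = [
    [(20.0 + step, -80.0 + 0.1 * member) for step in range(6)]
    for member in range(50)
]
site = (23.0, -78.0)

probability = strike_probability(scenarios, site, radius_km=100.0)
print(f"Share of scenarios within 100 km of the site: {probability:.2f}")
```

A real workflow would also weigh intensity and timing for each scenario; the point here is only that a set of scenarios becomes a probability once you count how many of them satisfy a condition.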

When AI learns to think Geospatially

Although each Earth AI model provides strong insight into a particular domain, a complete understanding of our planet requires drawing on several domains at once. Any individual model, whether it concentrates on imagery, human activity, or climate, has inherent limitations due to its narrow focus. That’s where Google’s new Gemini-powered Geospatial Reasoning Agent comes in.

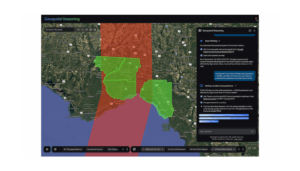

Built on Earth AI’s foundation models, the agent interprets natural language questions and orchestrates multiple analytical steps to deliver precise geospatial insights. It acts like a conductor, coordinating “expert” sub-agents trained to handle everything from satellite imagery and environmental data to population and infrastructure statistics.

For example, if a user asks,

“Which communities are most vulnerable to an approaching hurricane?”

the agent begins by using the Environment Model to map areas at risk of hurricane-force winds. It then pulls population data from Data Commons, overlays county boundaries from BigQuery, and runs a spatial analysis to pinpoint vulnerable zones. Using Population Dynamics Foundations, it identifies at-risk postal codes and applies the Remote Sensing Foundations model to locate critical infrastructure like hospitals and power lines.
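The orchestration pattern in that walkthrough can be sketched in a few lines. This is not the Geospatial Reasoning Agent or any Google API. It is a toy under stated assumptions: each "sub-agent" is a plain function returning invented values for three fictional counties, and the coordinator joins their outputs and ranks the result. Every name and number below is hypothetical, except the 119 km/h threshold, which is the lower bound of Category 1 on the Saffir-Simpson scale.

```python
from dataclasses import dataclass

HURRICANE_FORCE_KMH = 119.0  # Category 1 lower bound (74 mph)

@dataclass
class County:
    name: str
    population: int
    peak_wind_kmh: float

def environment_agent():
    """Hypothetical sub-agent: forecast peak wind per county (toy values)."""
    return {"Alpha": 150.0, "Beta": 95.0, "Gamma": 130.0}

def population_agent():
    """Hypothetical sub-agent: population per county (toy values)."""
    return {"Alpha": 20_000, "Beta": 500_000, "Gamma": 120_000}

def vulnerable_counties():
    """Coordinator: call each sub-agent, join on county name, filter, rank."""
    winds = environment_agent()
    people = population_agent()
    # Keep only counties that both sub-agents know about.
    counties = [
        County(name, people[name], wind)
        for name, wind in winds.items()
        if name in people
    ]
    # Keep counties expected to see hurricane-force winds.
    at_risk = [c for c in counties if c.peak_wind_kmh >= HURRICANE_FORCE_KMH]
    # Rank by the number of people exposed, largest first.
    return sorted(at_risk, key=lambda c: c.population, reverse=True)

ranked = vulnerable_counties()
for county in ranked:
    print(county.name, county.population, county.peak_wind_kmh)
```

In the toy data, Beta has the largest population but drops out because its forecast wind is below the threshold. That is the kind of cross-domain filtering no single model could do alone.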

By combining reasoning with automation, the Geospatial Reasoning Agent turns days of manual analysis into moments, bringing Earth-scale intelligence closer to real-time action.

Limitations and future work

While Google Earth AI marks a breakthrough in Earth-scale intelligence, several challenges persist. Current geospatial reasoning agents excel in structured domains like environmental forecasting and disaster response but struggle with unstructured or out-of-distribution queries. Data inconsistencies, limited regional coverage, and variations in satellite resolution can affect the accuracy and scalability of insights. Moreover, existing evaluation methods rely heavily on automated rubrics and lack extensive validation by human experts.

Future work will focus on expanding reasoning capabilities across broader geospatial domains, including agriculture, biodiversity, and urban planning. Integrating Gemini-powered models with Earth Engine, Data Commons, and AI-based simulation tools will help build adaptive systems capable of handling complex spatial-temporal dynamics. Developing stronger evaluation frameworks with expert review, diverse benchmarks, and real-world testing will be essential. The ultimate goal is to create Earth AI systems that are accurate, transparent, and resilient in addressing global environmental and societal challenges.
