Automated feature extraction from satellite imagery has made major progress in the last year. Accurate building footprints extracted from high-resolution satellite imagery are available from companies such as Ecopia, which has just announced a partnership with DigitalGlobe. NVIDIA has also demonstrated automated detection of road networks using sophisticated algorithms and high-resolution multispectral imagery.

Building footprints

SpaceNet is a repository of commercial satellite imagery and labeled training data, made available at no cost through Amazon Web Services to foster innovation in computer vision algorithms that automatically extract information from remote sensing data. It is the result of a collaboration between DigitalGlobe, CosmiQ Works, and NVIDIA. Three competitions have been held using SpaceNet data: two for automated building footprint extraction from high-resolution satellite imagery and a third for road network extraction.

The first SpaceNet Challenge was launched in November 2016. Imagery of Rio de Janeiro from the WorldView-2 satellite, at 50 cm resolution with eight spectral bands, was made publicly available on Amazon Web Services (AWS). Forty-two developers competed to create algorithms that extract building footprints from the imagery. The source code of the winning algorithms was made available in the SpaceNetChallenge GitHub repository.

The winning implementation, by a Brazilian developer, was based on random forests with a brute-force polygon search and did not use a deep learning framework. Based on the Round 1 results, the organizers concluded that automated building footprint extraction remained a challenge.

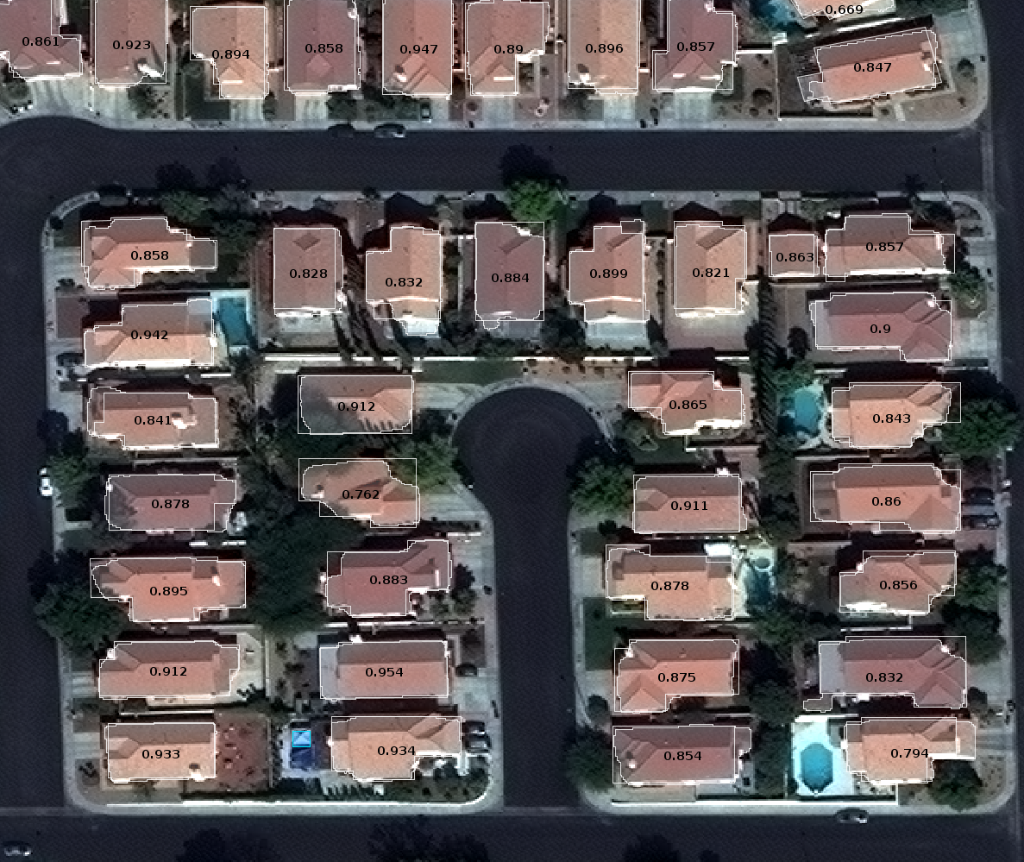

Round 2 of the SpaceNet Challenge used higher-resolution (30 cm) imagery from WorldView-3 covering four cities around the globe. Developers were challenged to improve on the performance of the first competition using the higher-resolution imagery and more geographically diverse training samples. SpaceNet on AWS hosted imagery and building footprints for Las Vegas, Paris, Shanghai, and Khartoum, with more than 180,000 building footprints (181,619). Additional image products were also made available: panchromatic, 3-band pan-sharpened, and 8-band pan-sharpened.
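
Pan-sharpening fuses the sharp panchromatic band with the coarser multispectral bands to produce multiband imagery at roughly the panchromatic resolution. As a rough illustration, the sketch below implements a mean-intensity variant of the Brovey transform, one common pan-sharpening method. It is an assumption, not necessarily how the SpaceNet products were generated, and it assumes the multispectral bands have already been resampled onto the panchromatic pixel grid. The function name `brovey_pansharpen` is hypothetical.

```python
import numpy as np


def brovey_pansharpen(ms: np.ndarray, pan: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Mean-intensity Brovey pan-sharpening (hypothetical helper, illustrative only).

    ms:  multispectral bands, shape (bands, H, W). Assumed to be already
         resampled onto the panchromatic grid.
    pan: panchromatic band, shape (H, W).
    Returns the sharpened bands, shape (bands, H, W), as float64.
    """
    if ms.ndim != 3 or ms.shape[1:] != pan.shape:
        raise ValueError("ms must be (bands, H, W) and match pan's (H, W)")
    ms = ms.astype(np.float64)
    pan = pan.astype(np.float64)
    # Per-pixel intensity estimated as the mean of the multispectral bands.
    intensity = ms.mean(axis=0)
    # Scale each band by pan / intensity: spatial detail comes from the pan
    # band, while the ratios between bands (the spectral signature) are kept.
    gain = pan / (intensity + eps)
    return ms * gain[np.newaxis, :, :]


if __name__ == "__main__":
    ms = np.stack([np.full((4, 4), 50.0), np.full((4, 4), 150.0)])
    pan = np.full((4, 4), 300.0)
    sharp = brovey_pansharpen(ms, pan)
    # Both bands are scaled by about 3 (pan of 300 over a band mean of 100).
    print(sharp[:, 0, 0])
```

Because the gain is computed per pixel and applied equally to every band, a pixel that is brighter in the panchromatic band is brightened in all bands by the same factor, which is why the ratios between bands survive the sharpening.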

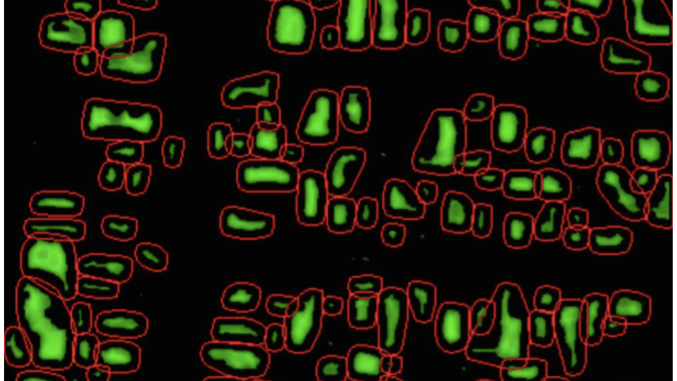

The winner of Round 2 applied U-Net, a deep neural network model originally developed for medical image segmentation. Successfully training deep networks requires many thousands of annotated training samples. Training was aided significantly by augmenting the imagery with OpenStreetMap data: the winning solution used OpenStreetMap layers together with WorldView-3 multispectral layers as input to the network.

In Las Vegas, the winning solution was outstanding at outlining suburban homes and also did well at identifying odd-shaped buildings. In Khartoum, it was very good at identifying the footprints of stand-alone apartment complexes but had trouble with small buildings, with buildings whose footprints are close together, and with buildings covering a very large area. One problem was inconsistent ground truth, which affected training. Overall, the solutions were successful enough that the organizers concluded the winning algorithms showed potential for automated mapping tasks, such as keeping maps up to date and assisting first responders during natural disasters. The source code for the winning implementations can be found in the SpaceNetChallenge GitHub repository.
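
The Round 2 description says only that OpenStreetMap layers and multispectral layers were used together as network input. One plausible way to do that, sketched here under stated assumptions, is to append rasterized OSM features as extra channels after the normalized spectral bands. The sketch assumes the OSM features (roads, buildings, and so on) have already been rasterized into binary masks on the image grid; the function `build_input_tensor`, the layer names, and the per-band standardization are illustrative assumptions, not the winner's code. The resulting array would then be fed to a segmentation network such as U-Net (not shown).

```python
from __future__ import annotations

import numpy as np


def build_input_tensor(ms: np.ndarray, osm_layers: dict[str, np.ndarray]) -> np.ndarray:
    """Stack multispectral bands and rasterized OSM masks into one input array.

    Hypothetical helper for illustration.
    ms:         multispectral image, shape (bands, H, W).
    osm_layers: maps a layer name (e.g. "roads") to a binary mask of shape
                (H, W), assumed already rasterized onto the image grid.
    Returns a float32 array of shape (bands + len(osm_layers), H, W).
    """
    ms = ms.astype(np.float32)
    # Standardize each band to zero mean and unit variance so that bands
    # with large raw values do not dominate the network's input.
    mean = ms.mean(axis=(1, 2), keepdims=True)
    std = ms.std(axis=(1, 2), keepdims=True) + 1e-6
    channels = [(ms - mean) / std]
    # Sort layer names so the channel order is the same on every call.
    for name in sorted(osm_layers):
        mask = osm_layers[name]
        if mask.shape != ms.shape[1:]:
            raise ValueError(f"OSM layer {name!r} does not match image size")
        channels.append(mask.astype(np.float32)[np.newaxis, :, :])
    return np.concatenate(channels, axis=0)
```

Sorting the layer names is a small design choice: it keeps the channel order stable between training and inference, which matters because the network learns separate weights for each input channel.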

DigitalGlobe has just announced a partnership with Ecopia, which has established an automated process for creating building footprints quickly and at scale by combining advanced machine learning with DigitalGlobe's Geospatial Big Data platform (GBDX) and imagery library. The two companies plan to automatically extract accurate 2D building footprints globally and then refresh the datasets periodically to detect and track changes over time. Such datasets would be valuable to municipal governments for permitting and to first responders after disasters. Ecopia is developing a database for all of Australia (7.6 million square kilometers) that will include every building with a roof area greater than 9 square meters, about 15 million buildings in total. The database will also link to other datasets, including geocoded addresses, property data, and administrative boundaries. Ecopia intends to update the dataset regularly to track changes, especially urban sprawl.

Road networks

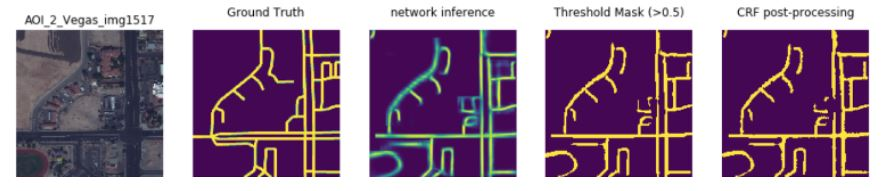

In November 2017, CosmiQ Works, Radiant Solutions, and NVIDIA announced a third-round competition, the “Road Detection and Routing Challenge,” to explore automated methods for extracting map-ready road networks and routing information from high-resolution satellite imagery.

NVIDIA has released results from several deep learning algorithms that illustrate both how difficult it is to identify road networks and how sophisticated the available tools are. These include using multispectral imagery to identify the material of the road surface itself (asphalt, gravel, packed earth) and more sophisticated processing techniques, including conditional random fields (CRF), percolation theory, and reinforcement learning.

NVIDIA used high-performance GPU compute resources provided by NVIDIA GPU Cloud and Amazon Web Services.
