This article is the first in a series about accuracy and precision. It briefly covers common factors that affect the quality of a dataset during the data acquisition process. Two more articles, one about GIS data types and attribute data structures and one about the limitations of data visualization methods, will be published later.

When we work on any geospatial project, there are always checkpoints to complete during the project lifecycle. First, we need to ensure that appropriate data is available for analysis. Next, how analysts handle different types of datasets is an important factor in delivering meaningful results. Finally, the choice of data visualization method affects how target users or the general public understand those results. All of these stages share the same prerequisite: a defined accuracy and precision requirement. That requirement will determine the quality and outcome of a GIS project.

Targeting the appropriate data

Geospatial data, particularly location information, allows us to understand how the physical environment, built-up areas and human activities are interrelated. Such data can be used by businesses, governments, the academic sector and the general public to facilitate socio-economic development. Applications such as location-based services, essential government services and land boundary establishment require exact information to maintain a high-quality database that closely matches the real world.

Much of the misrepresentation in geospatial projects is caused by various types of error, including inaccurate and imprecise data sources. A dataset must be well collected and maintained in order to generate meaningful results. Data quality is not easy to assess, since data has no physical characteristics that can be inspected directly; it is therefore all the more important to eliminate error during data acquisition. Error represents the level of imprecision and inaccuracy in data, and understanding and eliminating it is a prerequisite for maintaining data quality.

Like geographical phenomena, data quality can be differentiated in space, time, and theme. For each of these dimensions, several components of quality (including accuracy and precision) can be identified.

(Veregin, 2005)

Accuracy vs precision

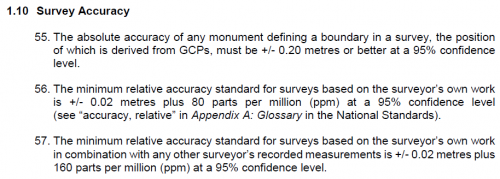

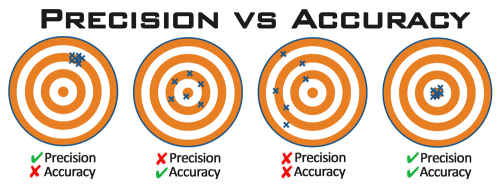

Accuracy describes the difference between the actual value of a real-world phenomenon and the encoded attribute that records it; in other words, how far a measurement departs from the true value. Highly accurate data is often costly in terms of both time and money, so accuracy requirements vary from project to project. Natural Resources Canada has implemented the National Standards for the Survey of Canada Lands, which suggest accuracy levels to meet different survey requirements.
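To make the definition concrete, here is a minimal sketch that compares repeated measurements of one quantity against a known reference value. The function name, the baseline length and the tolerance are all hypothetical illustrations, not values from the NRCan standards; a real project would take its tolerance from whichever accuracy class it must meet.

```python
from statistics import mean

def accuracy_report(measured, reference, tolerance):
    """Compare repeated measurements with a known reference value.

    The tolerance is a hypothetical, project-specific threshold,
    not a value taken from any published survey standard.
    """
    errors = [m - reference for m in measured]       # measured minus "actual"
    bias = mean(errors)                              # average offset from the reference
    worst = max(abs(e) for e in errors)              # largest single deviation
    return {"bias": bias, "max_abs_error": worst, "within_tolerance": worst <= tolerance}

# Hypothetical distances (m) measured along a baseline of known length 100.000 m.
report = accuracy_report([100.004, 99.998, 100.006], reference=100.000, tolerance=0.010)
print(report)
```

Raising the bar (a smaller tolerance) is exactly where the extra time and cost of highly accurate data comes in.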

Source: National Standards for the Survey of Canada Lands, NRCan

Precision corresponds to the level of detail in data. A precise attribute can describe features at a large scale in great detail. In some fields, particularly remote sensing, precision is expressed as resolution, in terms of pixel size and sampling rate. When two datasets have almost the same accuracy level but different spatial resolutions, the lower-resolution model will typically introduce more spatial error than the higher-resolution one. In other words, when we zoom in on a map to look for greater detail, we also enlarge the error, because the data cannot support detail finer than the resolution at which it was captured.
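The effect of resolution can be illustrated with a small simulation. The sketch below assumes the same set of "true" point positions is stored at different grid cell sizes (each point replaced by the centre of its cell), so the underlying accuracy is identical and only the resolution changes. The point set and cell sizes are hypothetical.

```python
import math
import random

def snap_to_grid(x, y, cell_size):
    """Replace a point by the centre of the grid cell that contains it."""
    col = math.floor(x / cell_size)
    row = math.floor(y / cell_size)
    return (col + 0.5) * cell_size, (row + 0.5) * cell_size

def mean_snapping_error(points, cell_size):
    """Average distance between the original points and their gridded positions."""
    total = 0.0
    for x, y in points:
        gx, gy = snap_to_grid(x, y, cell_size)
        total += math.hypot(x - gx, y - gy)
    return total / len(points)

random.seed(42)
# Hypothetical "true" positions scattered over a 1 km x 1 km area (metres).
points = [(random.uniform(0, 1000), random.uniform(0, 1000)) for _ in range(10_000)]

for cell in (1, 10, 30):
    worst = cell * math.sqrt(2) / 2  # half the cell diagonal
    print(f"{cell:>2} m cells: mean error {mean_snapping_error(points, cell):.2f} m, "
          f"worst case {worst:.2f} m")
```

For uniformly scattered points the mean error grows in proportion to the cell size (roughly 0.38 times the cell width), which is why zooming in on coarse data only makes this error more visible.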

Photo courtesy: www.precisionrifleblog.com

Although we all understand that accuracy and precision are essential to delivering high-quality geospatial solutions, it is not always possible to achieve both in every project because of limited budgets, environmental factors or human mistakes. Some inaccuracy always exists, since no data collection method or measurement system is infinitely precise. Once users understand the different types of error, they will have a clearer objective for building a customized error model.

No matter what kind of location data is needed and how it will be used, fieldwork is always required to keep the information up to date. Error will be introduced at different stages of data acquisition: some of it can be calculated and corrected afterwards, while the rest should be avoided during the acquisition process itself.

Different types of error

Many types of error are associated with the data collection stage of a project, but some of them are addressed more often than others.

Measurement error

Spatial accuracy represents the correctness of the observed data in terms of location. The differences between encoded location and ‘actual’ location can be measured in x, y and z dimensions.
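As a minimal sketch of measuring that difference in each dimension, the code below compares an encoded point with its reference ("actual") position. The coordinates are hypothetical projected values in metres, and the helper names are illustrative only.

```python
import math
from dataclasses import dataclass

@dataclass
class Point3D:
    x: float  # easting (m)
    y: float  # northing (m)
    z: float  # elevation (m)

def positional_error(encoded: Point3D, actual: Point3D) -> dict:
    """Split the encoded-minus-actual difference into x, y and z components."""
    dx = encoded.x - actual.x
    dy = encoded.y - actual.y
    dz = encoded.z - actual.z
    return {
        "dx": dx, "dy": dy, "dz": dz,
        "horizontal": math.hypot(dx, dy),            # error in the x-y plane
        "3d": math.sqrt(dx**2 + dy**2 + dz**2),      # total spatial error
    }

# Hypothetical surveyed point versus its reference position.
err = positional_error(Point3D(500_000.03, 5_930_000.04, 671.20),
                       Point3D(500_000.00, 5_930_000.00, 671.32))
print({k: round(v, 3) for k, v in err.items()})
```

Reporting horizontal and vertical error separately is useful because many projects set different requirements for each.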

When survey instruments are used to record location information, the quality of the data is often summarized with statistical measures, particularly the root mean square error (RMSE). In conventional land survey practice, ground control points are established and measured with total stations, levels and GNSS receivers. An observation set becomes suspect when some measurements fall outside the chosen confidence level; if those observations are kept, the error can propagate into later stages. Positional error can have environmental or systematic causes. Fortunately, most of these errors can be corrected afterwards once the relevant parameters are determined.
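The following sketch shows how RMSE summarizes a set of check-point residuals and how observations outside a confidence band might be flagged before they propagate. It assumes an a priori instrument standard deviation (`sigma`) and normally distributed errors, so that 1.96 sigma approximates a two-sided 95% confidence level; the residuals and `sigma` are hypothetical.

```python
import math

def rmse(residuals):
    """Root mean square error of residuals (measured minus reference)."""
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))

def flag_outliers(residuals, sigma, z=1.96):
    """Indices of residuals outside the z * sigma band.

    sigma is an assumed a priori standard deviation of the instrument;
    z = 1.96 corresponds to a two-sided 95% level for normal errors.
    """
    return [i for i, r in enumerate(residuals) if abs(r) > z * sigma]

# Hypothetical height residuals (m) at five check points.
residuals = [0.004, -0.006, 0.003, 0.021, -0.002]
print("RMSE with all points:", round(rmse(residuals), 4))

bad = flag_outliers(residuals, sigma=0.005)
print("Flagged observations:", bad)

clean = [r for i, r in enumerate(residuals) if i not in bad]
print("RMSE after removing flagged points:", round(rmse(clean), 4))
```

A single bad observation can more than double the RMSE here, which is why suspect observations are usually re-measured rather than averaged in.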

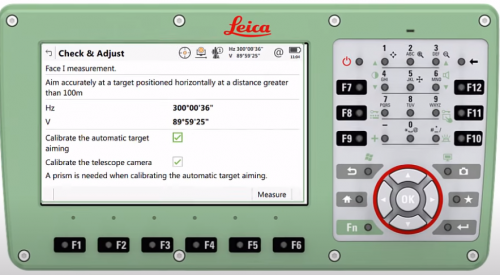

Systematic error, on the other hand, is often caused by instrumental defects. A small measurement deviation, often caused by the collimation error of a level or EDM, can accumulate into a much larger error over a traverse survey. This phenomenon is known as error propagation. It is important to balance backsight and foresight distances so that instrumental errors such as collimation error cancel out, and to maintain a longer backsight where possible.
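The sketch below illustrates two points from the paragraph above under simple assumptions. First, in levelling each staff reading is off by (sight length × collimation slope), so the error in the height difference cancels only when backsight and foresight lengths are equal. Second, a systematic error repeats with the same sign and grows linearly with the number of setups, whereas independent random errors of the same size grow only with the square root. The collimation slope and per-setup error values are hypothetical.

```python
import math

def observed_height_difference(true_dh, bs_dist, fs_dist, collimation):
    """Height difference (backsight minus foresight) from a level with a tilted line of sight.

    collimation is an assumed error slope: metres of reading error per metre
    of sight length. Each reading is off by distance * collimation.
    """
    bs_error = bs_dist * collimation
    fs_error = fs_dist * collimation
    return true_dh + bs_error - fs_error

e = 0.0001  # hypothetical: 0.1 mm per metre of sight length
print("balanced error:  ", round(observed_height_difference(1.250, 40, 40, e) - 1.250, 6))
print("unbalanced error:", round(observed_height_difference(1.250, 60, 20, e) - 1.250, 6))

def accumulated_error(per_setup, setups, systematic):
    """Growth of error over a run of setups: n * e if systematic, sqrt(n) * e if random."""
    return per_setup * setups if systematic else per_setup * math.sqrt(setups)

for n in (1, 16, 100):
    print(n, "setups:",
          round(accumulated_error(0.002, n, True), 4), "m systematic,",
          round(accumulated_error(0.002, n, False), 4), "m random")
```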

Moreover, all survey equipment requires regular calibration to maintain measurement accuracy and reliability. For instance, the two-peg test is a simple and effective way to check whether a level requires service. A calibration baseline can be used to calibrate an EDM and update the instrument errors of a total station. Last but not least, a GNSS Validation Network can be used for GNSS antenna calibration.
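The arithmetic behind the two-peg test can be sketched as follows. With the level set up exactly midway between pegs A and B, collimation error affects both readings equally and cancels, giving the true height difference. With the level set up close to one peg, the sight lengths differ, so any change in the apparent difference is attributed to collimation error. The readings and distances are hypothetical, and the acceptable limit should come from the instrument manufacturer or project specification.

```python
def two_peg_collimation(a1, b1, a2, b2, dist_a2, dist_b2):
    """Estimate collimation error (as a slope) from a two-peg test.

    Setup 1: level midway between pegs A and B, so a1 - b1 is the true difference.
    Setup 2: level near one peg, with sight lengths dist_a2 and dist_b2 (m).
    Each reading in setup 2 is off by distance * slope.
    """
    true_diff = a1 - b1
    apparent_diff = a2 - b2
    return (apparent_diff - true_diff) / (dist_a2 - dist_b2)

# Hypothetical staff readings (m) and sight lengths (m).
slope = two_peg_collimation(a1=1.500, b1=1.200, a2=1.4003, b2=1.1033,
                            dist_a2=3.0, dist_b2=33.0)
print(f"Collimation error: {slope * 1000:.2f} mm per metre")
print(f"Reading error at a 50 m sight: {slope * 50 * 1000:.1f} mm")
```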

An EDM must be serviced regularly at a calibration baseline

Photo courtesy: Leica Geosystems AG

Temporal error

Temporal error has two major causes: outdated datasets and incorrect time ranges. Information in the database must be kept up to date so that its attributes describe reality accurately. An outdated dataset is one of the most common problems, especially when the workforce is limited or the surveyed area is very large. Human settlements and their surroundings are continually changing and expanding, which urges local authorities to update their geocode databases regularly. For instance, a local township government may not have the resources to update its inventory of street furniture every year, and some items may be damaged by traffic accidents or snowstorms in the meantime. As a result, decision makers working from an outdated dataset cannot determine how many street signs or barriers need to be replaced.
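One simple way to keep outdated records visible is to flag every item whose last survey is older than an agreed maximum age. The sketch below assumes each inventory record carries a last-surveyed date; the record layout, item IDs and the two-year re-survey policy are hypothetical.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class InventoryItem:
    item_id: str
    kind: str             # e.g. "street sign", "barrier"
    last_surveyed: date

def stale_items(items, as_of, max_age_days):
    """Return items whose last survey is older than the allowed age."""
    return [i for i in items if (as_of - i.last_surveyed).days > max_age_days]

# Hypothetical inventory records for a township's street furniture.
inventory = [
    InventoryItem("S-001", "street sign", date(2019, 6, 1)),
    InventoryItem("S-002", "street sign", date(2023, 5, 20)),
    InventoryItem("B-010", "barrier", date(2021, 9, 15)),
]

# Assumed policy: re-survey anything not checked within roughly two years.
for item in stale_items(inventory, as_of=date(2024, 1, 1), max_age_days=730):
    print(item.item_id, item.kind, item.last_surveyed)
```

A list like this does not fix the outdated data, but it tells decision makers which parts of the inventory they should not rely on.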

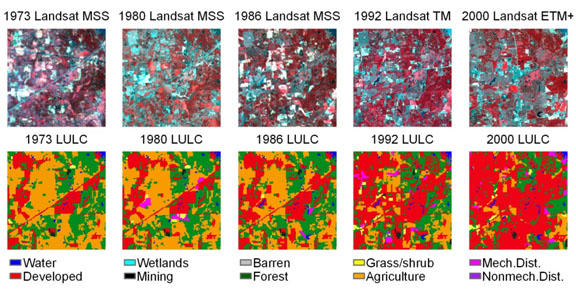

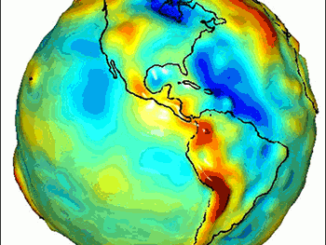

On the other hand, when a user is working on a 4D GIS project with time values, those temporal dimensions should match the timestamps in the target database. In this case, the time values in the attributes do not necessarily need to be up to date. A very popular GIS application with temporal coordinates is land cover change detection, in which Landsat imagery is used to determine land use and land cover types. From 1999 to 2011, the USGS conducted a research project named Land Cover Trends to understand the rates, causes and trends of land use and land cover change in the U.S. Using images with irrelevant timestamps will not generate meaningful results in this case.
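Selecting imagery by timestamp can be sketched as a simple filter over a scene catalogue. The catalogue below is hypothetical (it is not a real Landsat or USGS listing), and the optional month filter is an assumed choice: restricting each epoch to the same season avoids mistaking seasonal differences for land cover change.

```python
from datetime import date

# Hypothetical scene catalogue: (scene identifier, acquisition date).
scenes = [
    ("scene_a", date(1986, 7, 14)),
    ("scene_b", date(1992, 8, 2)),
    ("scene_c", date(2005, 6, 30)),
    ("scene_d", date(1992, 12, 19)),
]

def scenes_for_epoch(catalogue, start, end, months=None):
    """Keep scenes acquired within [start, end], optionally only in the given months."""
    return [
        (scene_id, acquired) for scene_id, acquired in catalogue
        if start <= acquired <= end and (months is None or acquired.month in months)
    ]

# Summer scenes around 1992 for one epoch of a change-detection study.
epoch_1992 = scenes_for_epoch(scenes, date(1991, 1, 1), date(1993, 12, 31), months={6, 7, 8})
print(epoch_1992)
```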

Time is an important variable when studying land cover trends.

Photo courtesy: USGS

Classification error

Classification error is another type of inaccuracy that often occurs during field observation or data entry, in which a surveyed item is improperly categorized. It concerns non-spatial attributes, which have no spatial correlation, and it can result either from inappropriate categorization or from an incorrect response by a surveyed respondent. Misclassification can also be caused by confusing or similar features. For instance, street signs are essential for providing location information, but road naming policies can make their content hard to distinguish in some situations. One example is the neighborhood of Glastonbury in Edmonton, where three nearby streets are named after Glenwright and their street signs look identical. This may cause confusion for anyone unfamiliar with the area.
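A common way to quantify classification error is to re-check a sample of items against a reference (for example, a second site visit) and tabulate reference class against recorded class, as in a confusion matrix. The sketch below computes the overall accuracy and lists the misclassified pairs; the sign categories and counts are hypothetical.

```python
from collections import Counter

def confusion_counts(reference, recorded):
    """Count (reference class, recorded class) pairs over all checked items."""
    return Counter(zip(reference, recorded))

def overall_accuracy(reference, recorded):
    """Share of checked items whose recorded class matches the reference class."""
    matches = sum(1 for ref, rec in zip(reference, recorded) if ref == rec)
    return matches / len(reference)

# Hypothetical re-check of six street signs: class verified on site vs. class in the database.
reference = ["stop", "yield", "name", "name", "stop", "yield"]
recorded = ["stop", "name", "name", "name", "yield", "yield"]

print(f"Overall accuracy: {overall_accuracy(reference, recorded):.1%}")
for (ref, rec), n in confusion_counts(reference, recorded).items():
    if ref != rec:
        print(f"{n} x '{ref}' recorded as '{rec}'")
```

Looking at which pairs are confused (not just the overall rate) points to the features that are hard to tell apart in the field.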

Photo courtesy: Google Street View

In addition, population coverage error can occur in any census, caused by an incomplete list of dwellings or by respondent error. Statistics Canada has conducted an analysis to further understand how it can occur.

Coverage errors may also be committed during the processing stage at any point where records for persons or households are added to or removed from the census database. Records can be deleted by mistake. Questionnaires may be linked to the wrong record or returned too late to be included.

(Statcan, 2011)

This type of error is usually caused by outdated information in the database. For instance, people may move out before Census Day or move in after Census Day, so that a dwelling is misclassified as occupied or unoccupied.
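The move-in/move-out example can be sketched as a check of each dwelling's occupancy on the reference day against the status stored in the database. The residency records, dwelling IDs and stored statuses are hypothetical, and the date is only an example reference date.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass
class Residency:
    dwelling_id: str
    moved_in: date
    moved_out: Optional[date] = None  # None means still living there

def occupied_on(residencies, dwelling_id, reference_day):
    """True if anyone lived in the dwelling on the reference (census) day."""
    return any(
        r.dwelling_id == dwelling_id
        and r.moved_in <= reference_day
        and (r.moved_out is None or r.moved_out > reference_day)
        for r in residencies
    )

census_day = date(2011, 5, 10)  # example reference date
residencies = [
    Residency("D1", date(2008, 3, 1), date(2011, 4, 30)),  # moved out before census day
    Residency("D2", date(2011, 6, 1)),                     # moved in after census day
    Residency("D3", date(2005, 1, 15)),
]
# Hypothetical status stored in an outdated dwelling database.
database_status = {"D1": True, "D2": True, "D3": True}

for dwelling, recorded in database_status.items():
    actual = occupied_on(residencies, dwelling, census_day)
    if actual != recorded:
        print(f"{dwelling}: recorded {'occupied' if recorded else 'unoccupied'}, "
              f"actually {'occupied' if actual else 'unoccupied'} on census day")
```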

Data Standardization

The Canadian Geospatial Data Infrastructure (CGDI) has developed Geospatial Standards and Operational Policies (the Policies) to standardize practices such as data acquisition, digitization, conversion and visualization. The standards encourage users to identify geospatial data through metadata and promote effective and efficient data integration among different organizations. Information about the location and features of an object can then be stored and read in different systems using the same common data format.
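As a small illustration of metadata discipline, the sketch below checks a metadata record for missing elements before the dataset is shared. The required element list is hypothetical and chosen only for this example; a real metadata profile (for instance one based on ISO 19115) defines its own mandatory elements.

```python
# Hypothetical minimum element set for this sketch only; a real metadata
# profile defines its own mandatory elements.
REQUIRED_FIELDS = ("title", "abstract", "crs", "reference_date", "lineage", "positional_accuracy_m")

def missing_metadata(record):
    """List required elements that are absent or empty in a metadata record."""
    return [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]

# Hypothetical metadata record for a street furniture inventory.
record = {
    "title": "Street furniture inventory",
    "abstract": "Signs and barriers surveyed by the township.",
    "crs": "EPSG:4326",
    "reference_date": "2023-05-20",
    "lineage": "",
}
print(missing_metadata(record))
```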

By applying geospatial standards to everyday work, different GIS users can share and convert location-based information without barriers. Standards can eliminate misunderstandings caused by converting uncommon data formats or combining rows and fields in attribute tables. When there are guidelines for geospatial technicians to follow, the duration and difficulty of the data acquisition process can be predicted in advance.
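To show what sharing through a common format can look like in practice, here is a minimal sketch that writes point records as GeoJSON as defined in RFC 7946, which uses WGS 84 longitude/latitude with longitude first. GeoJSON is used only as one example of an open exchange format; the article does not say which formats the CGDI Policies prescribe. The record layout (`lon`, `lat` plus attribute keys) is an assumption of this sketch.

```python
import json

def to_geojson(records):
    """Write point records as an RFC 7946 GeoJSON FeatureCollection.

    Each record is assumed to be a dict with 'lon' and 'lat' in WGS 84 degrees;
    all other keys become feature properties. Longitude comes first.
    """
    features = []
    for rec in records:
        lon, lat = rec["lon"], rec["lat"]
        # Catch swapped or invalid coordinates before they reach other systems.
        if not (-180 <= lon <= 180 and -90 <= lat <= 90):
            raise ValueError(f"coordinates out of range: {lon}, {lat}")
        properties = {k: v for k, v in rec.items() if k not in ("lon", "lat")}
        features.append({
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": [lon, lat]},
            "properties": properties,
        })
    return json.dumps({"type": "FeatureCollection", "features": features}, indent=2)

# Hypothetical street furniture record near Edmonton.
print(to_geojson([
    {"lon": -113.49, "lat": 53.54, "kind": "street sign", "surveyed": "2023-05-20"},
]))
```

The range check catches one classic conversion mistake, swapped latitude and longitude, which is exactly the kind of misunderstanding a shared format with a fixed axis order helps prevent.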

Maintaining a reasonable accuracy and precision level is a prerequisite for producing meaningful results that properly represent reality. Solutions such as standardization are available to help identify faults throughout a GIS project's life cycle. The Policies also cover much more than data standardization and accuracy, including data access and data visualization. The data processing and visualization stages, however, involve more complex procedures that can also create error. These topics will be covered in the upcoming articles.

Further reading

https://www.nrcan.gc.ca/maps-tools-publications/maps/100-years-geodetic-surveys-canada/9110

https://www.nrcan.gc.ca/earth-sciences/geomatics/canadas-spatial-data-infrastructure/8902

https://clss.nrcan-rncan.gc.ca/clss/surveystandards-normesdarpentage/

https://www.cbc.ca/news/canada/edmonton/edmonton-city-streets-naming-1.5685398

https://www12.statcan.gc.ca/census-recensement/2011/ref/guides/98-303-x/ch3-eng.cfm
