Precision must not be compromised…

The allure of automation: entering prompts into your favourite bot to reduce your workload and streamline tasks. The results can astound. But… is this leading to a tacit acceptance of “good enough”? Are users simply seeking shortcuts? Are companies adopting these tools with the goal of cutting payroll? Or are such tools being applied responsibly and effectively, leveraging the essential human elements, and to their fullest potential?

“Precision must not be compromised” was a key statement from Nicholas Cumins, Chief Executive Officer of Bentley Systems, at a press briefing during the Year in Infrastructure (YII 2025) awards event in November of 2025. This line hit home, especially amid the ongoing discussions around AI and automation for the infrastructure sector.

You might tire of hearing me, and others, say: further automation is an imperative. The global infrastructure gaps will never be closed using legacy tools and methods. So, it is heartening to see substantive progress over the past decade in the development of proven, effective, and responsible automation. Bentley Systems hosts the YII awards, which showcase not only its own solutions, but also real-world implementation and innovation among the finalists and award winners. This is why it is one of my favourite events to attend: it is a snapshot of the state of automation across infrastructure lifecycles, from planning, reality capture, and design to construction, digital twinning, and operations. While Bentley is a prominent leader in these matters, I’ll highlight some others later in this article.

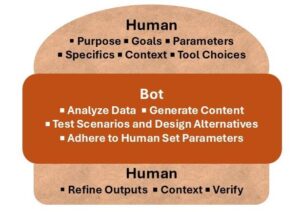

The Sandwich

Cumins related a recent conversation he had with the CTO of a major engineering firm, who brought up the theme of “the machine in the middle”, and the much-discussed “AI sandwich”.

“What is the machine in the middle?” said Cumins. “It means AI is in the middle of the process, but it starts with a human, and it ends with a human. Because you know the potential impact, the consequences of bad design when it comes to infrastructure. So, no way will engineering services firms, anytime soon, rely on AI to do all the work. At the end of the day, they stand behind the engineering design that they have created, they stand behind the models, they stand behind the drawings. They literally stand up those drawings and engage their reliability.”

Look up the concept of the “AI sandwich” and you’ll see many characterizations. However, they all seem to include the following:

- The human at the top: Establishing goals, providing the design rules and parameters, choosing the tools, providing context, and asking specific questions.

- The bot in the middle: Analyses data, generates content, tests scenarios and design alternatives. But strictly within the bounds set by the human, acting as a “cobot”.

- The human on the bottom: Refines the output. Adds context, implements checks, and verifies: it is the human engineer who will need to stand behind the plans.
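For readers who think in code, the three slices can be sketched as a tiny pipeline. This is only an illustration of the pattern, not how any vendor implements it: the names `DesignBrief`, `Candidate`, `bot_generate`, and `human_review`, and the slope limits, are all made up for the example.

```python
from dataclasses import dataclass


@dataclass
class DesignBrief:
    """Top slice: goals and hard limits set by the human engineer."""
    goal: str
    min_slope_pct: float
    max_slope_pct: float


@dataclass
class Candidate:
    """One design alternative proposed by the bot (hypothetical structure)."""
    name: str
    slope_pct: float


def bot_generate(brief: DesignBrief, steps: int = 5) -> list[Candidate]:
    """Middle slice: enumerate alternatives strictly inside the brief's bounds.

    Expects steps >= 2 so the range can be divided evenly.
    """
    span = brief.max_slope_pct - brief.min_slope_pct
    return [
        Candidate(f"option-{i + 1}",
                  round(brief.min_slope_pct + span * i / (steps - 1), 2))
        for i in range(steps)
    ]


def human_review(candidates, approve) -> list[Candidate]:
    """Bottom slice: nothing is released unless the engineer approves it."""
    return [c for c in candidates if approve(c)]


brief = DesignBrief("grade a parking area so it drains", 1.0, 5.0)
proposals = bot_generate(brief)
# Stand-in for the engineer's judgement: accept only the gentler grades.
signed_off = human_review(proposals, approve=lambda c: c.slope_pct <= 3.0)
print([c.name for c in signed_off])
```

The point of the structure is that the bot never sees anything but the brief, and nothing leaves the pipeline without passing through `human_review`.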

Cumins reiterated that full reliance on the bots will not happen anytime soon. “Let’s make sure that when [AI] makes recommendations, that those are trustworthy and based on sound engineering, logic, and physical principles. Trust with AI is really these two components: the collaboration with the engineer, and then the necessary engineering context that is coming from our application.” It is a matter of deftly finding a measured (no pun intended) balance in cobot symbiosis.

Cumins noted an award-winning entry from the YII event in Singapore a few years ago that automated structural computations. Hyundai Engineering used the API for Bentley’s STAAD (structural engineering software) to port results into their own database and AI analysis workflow, creating over 1,600 design scenarios and generating 27 million prediction models for their projects.

Conversations with Bots

At the YII event there was the iTwin Lab, an interactive exhibit. A highlight for me (as a geo-nerd) was the OpenSite+ demonstration. It is design software that employs a copilot-style approach: a conversational interface where the engineer poses and refines design asks, and the software presents iterative design alternatives until the desired results can be checked and verified.

“The scope and depth of modeling are getting bigger, and workflows are increasingly more data-centric,” says Ian Rosam, Product Management Director for Bentley’s Civil Engineering Applications. “Efficiency through automation underpins everything we’re doing across our open applications.” Per materials on their range of design software (where applied), ‘AI is not treated as an add-on per se but is embedded with large language models (LLMs) trained on engineering-specific knowledge, like building codes and environmental rules.’

“Consider hydraulic design and analysis features in OpenSite+,” says Rosam. “Previously, engineers would manually extract data, apply formulas, and validate computational steps. Now, they can use natural language prompts, typed or spoken, to execute complex calculations that the AI contextually understands. It’s a little bit like approaching an exam situation. ‘Do I have to remember the formulas, or can I go in with an open book?’ With OpenSite+, the LLM is trained on ‘Haestad Methods’ for hydraulic modeling and can be taught more. This allows it to carry out the computations, though you still need to understand the application of the theoretical and the output.”

The demonstration, on an example site where a portion needs to be optimized for parking while meeting drainage targets, took minutes, whereas legacy manual methods may have taken days. I should note that the operator tasking the bot needs to have the fundamental engineering knowledge and experience to converse effectively. Inexperienced questions will yield questionable results. I liken this to the exchange with the mega-computer “Deep Thought” in the “Hitchhiker’s Guide to the Galaxy”. The answer given to “life, the universe, and everything” was “42”, with the added qualifier: “you cannot understand the answer without first knowing what the question is”.

Implementation Across the Sector

There are fine examples, large and small, across the infrastructure, geospatial, and geomatics sectors. Most certainly in reality capture, where automation is helping to reduce bottlenecks. The issue is that mass data capture has become so rapid, easy to perform, and inexpensive (per data unit) that the sheer volume outpaces a user’s capacity and resources to process it. And there’s an irony in that reality capture solutions seek to mimic elements of human perception.

While machine learning has been employed for decades, for instance for point cloud classification, the more recent adoption of neural processing is proving to be a way past legacy bottlenecks.

Using bots to extract linework from aerial photos, for example to produce drawings for land title surveys, is offered as a service by firms like AirWorks and Aerotas. Both firms note that there should still be some amount of human intervention: QA/QC, and examining features that the bot has flagged as uncertain.

With neural processing, innovation in computer vision (CV) for geospatial applications is booming. This is seen (no pun intended) in tools for field data capture and construction layout automation, like the example of the iCON Trades system that potentially could upend how traditional total stations work. And a combination of powerful edge computing, neural processing, CV, and feature recognition databases, brings an exciting new proximity awareness solution (Xsight360) for mining and construction for site safety.

Powerful CV implemented as a cobot for field capture, with downstream processing automation (in the cloud), was the magic sauce in the Looq system that has continued to make waves in the reality capture space in recent years. Not only did it challenge the “laser-scan-everything” conventional wisdom, but it also highlights the key role of the human in cobot symbiosis.

The founders of Looq had originally toyed with the idea of a literal mobile bot laden with cameras and capturing a site autonomously. But they soon realized that a bot could not make the judgements of how best to navigate complex sites, and what to capture. The post-processing is done in the cloud: point cloud classification, and elements of feature recognition. The Looq team has learned from its early customers and continues to add functionality, for example heads-up drafting tools so that customers, using their skills and experience, can refine the end product.

Asset inventory and management are benefiting from advances in neural processing and CV. For example, Blyncsy (acquired by Bentley) can interrogate massive numbers of images rapidly to flag features. But where it can really shine is in identifying changes across successive image sets. Its customers include many state departments of transportation (DOTs). One example is a DOT that put inexpensive cameras on fleet vehicles. Daily images from these vehicles, going about their normal operations, are processed overnight, and Blyncsy identifies changes as “events”, flagged for further examination. Asset status and road conditions can be updated daily.
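The “flag changes between successive image sets” idea can be illustrated with a toy comparison of two daily inventories. This is a sketch under my own assumptions, not Blyncsy’s method: it presumes the image analysis has already produced a mapping of asset IDs to observed states, and the function name `detect_events`, the IDs, and the state labels are invented for illustration.

```python
def detect_events(previous: dict[str, str], current: dict[str, str]) -> list[tuple[str, str]]:
    """Compare two daily inventories and return (asset_id, event) pairs.

    Only differences are reported, so a reviewer sees the exceptions
    rather than the full inventory.
    """
    events = []
    for asset_id in sorted(previous.keys() | current.keys()):
        before = previous.get(asset_id)
        after = current.get(asset_id)
        if before is None:
            events.append((asset_id, "new"))        # seen today, not yesterday
        elif after is None:
            events.append((asset_id, "missing"))    # seen yesterday, not today
        elif before != after:
            events.append((asset_id, f"{before} -> {after}"))
    return events


# Hypothetical inventories derived from two consecutive days of fleet imagery.
monday = {"sign-101": "ok", "guardrail-7": "ok", "cone-3": "present"}
tuesday = {"sign-101": "ok", "guardrail-7": "damaged", "sign-202": "ok"}

for asset_id, event in detect_events(monday, tuesday):
    print(asset_id, event)
```

Unchanged assets produce no output at all, which is the whole appeal: the engineer’s attention goes only to what moved, appeared, disappeared, or degraded.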

“That’s the trustworthy AI aspect that we’re trying to move onto the table,” said Mark Pittman, Blyncsy CEO, Senior Director of Transportation AI. “It is not like it’s doing the entire triage job for you, but it’s flagging the things that you need to review as an engineer to say, ‘Does this matter? How do I respond? What are my recommendations? What is the next step?’ Sifting through that data from a manual process perspective would be impractical and cost prohibitive. It should be done with, a kind of build-your-own LLM, and neural processing.”

“You can get all sorts of off-the-shelf AI tools today that will identify, detect a sign for you, telling you if that sign is a roadway sign or a billboard sign or a regulatory sign or an advisory sign,” said Pittman. “But considering the entire MUTCD (Manual on Uniform Traffic Control Devices for Streets and Highways), it is a very challenging task, and that library is constantly evolving. We see ourselves as having a very specialized transportation and engineering focus. We’ve built a specific AI tool for that, and we’ve been very transparent about this.”

“If you go to [our site] there’s an explore maps button,” said Pittman. “We published the entire U.S. freeway network, guardrail layer. We published speed limit signs for the entire U.S. freeway network. We published what work zones looked like, say in October of last year on that. We’ve done this for the UK, we’ve done this for Canada. We’ve done this for Australia, and New Zealand. We just put the data out there, because what we find is the federal government may not actually know where, or how many miles of guardrail there are across the country, but we’ve identified this and now made it available, because now we’re all speaking the same language. Now we’re all starting with a core piece of digital infrastructure that we can build from and say, great, we’ve got [tens of thousands] of miles of guardrail in the U.S. So, if we’re someday going to have to replace that with a new standard for guardrail, because autonomous vehicles and electric vehicles have a higher wheel base, and are heavy, and they blow through them, then how much is it going to cost? Now we have a number, because we actually know what our inventory is.”

LLM, LSM and LGM

The aspect of the current AI boom that the general public is most familiar with taps large language models (LLMs). Whether you are asking for help outlining a report, summarizing meeting notes, or making goofy graphics for fun, LLMs are powerful.

However, geospatial data has aspects that many in the geospatial disciplines feel the common LLMs handle poorly. For one, if a subject is somewhat esoteric (as many geospatial elements are) and there is limited ‘meat’ for the LLM to draw on, then some bots will just make guesses, and the results can be comical.

The copilot-style example we looked at earlier with OpenSite+ is an example of a context-aware assistant tailored to the engineering disciplines and infrastructure sectors. As the product site notes: It relies on a large language model (LLM) that understands what engineers want to do and then leans on multiple AI agents trained on discipline-specific knowledge to do the actual work.

But what if these large models were even more spatially focused? Imagine tapping a rich digital twin of a city or site when you enter the planning and design phases. There’s a lot of conversation about, and R&D into, what many call large spatial models (LSMs) or large geospatial models (LGMs).

Patrick Cozzi, founder of Cesium, a spatial foundation platform, is now the Chief Product Officer at Bentley. He is quite excited to bring the two worlds together, to tap spatial richness for analysis. “I’m really excited by iTwin Capture,” said Cozzi. “Now I can join the capture with the underlying reality services. From photos to say, Gaussian [splats].” Splats add more than just richness for visualizations; they can help refine what is being seen, for example under different light conditions where certain objects (rails, cables, fencing, semi-transparent and transparent materials, etc.) could be missed or misidentified. “And AI feature detectors applied to point clouds. So, all these reality services, they’re now available as Cesium services. The seasoned developer community has, super easy to use APIs, and drag and drop interfaces.”

Why

We need to become more efficient, but without compromising precision and quality. To proceed otherwise, especially when it comes to infrastructure, could be costly at best, and pose hazards to public safety at worst.

In a panel at YII 2025, Michael Pearson, Digital Delivery Program Manager, Oklahoma Department of Transportation, gave a spot-on summary of why we should automate, and what it can bring. “We’re talking about major infrastructure projects where people are going to have rapid insight. And based on the creativity of our clients, they come back to us saying, ‘Hey, I think we could use it this way. Can you guys help us do that?’ That kind of thing is really exciting, the kind of collaborative opportunity that it opens up.”

“And also think of it, almost as the mundane but simple automation, creation and background within [project management software], or being able to connect to points that previously needed manual intervention,” said Pearson. “We’ve got people that are able to start to do these things, who are very creative people, you give them the right tools, and they solve the problem.”

“That’s really exciting. You’ve got the power of a new tool put in the hands of creative people, and you’re going to have a new way of doing business. That’s fantastic. I think in terms of innovation, you can spend less time looking for information and more time working and solving problems.”

Beep on!
