Subsurface utility engineering (SUE) is a field where precision is critical – missteps can lead to costly delays, safety hazards, and even infrastructure failures. Yet, historically, the underground world has been poorly documented, with incomplete or outdated records posing significant challenges. Today, however, technological advancements are reshaping how we locate, visualize, and manage these buried assets.

In this interview, Peter Srajer, Chief Scientist at ProStar Geocorp, delves into the persistent issues of data preservation, accuracy, and accessibility in subsurface utility mapping. He also discusses how innovations such as machine learning and digital twins are not only transforming SUE practices but also improving decision-making and collaboration in infrastructure projects. The insights shed light on the future of subsurface engineering and the growing importance of standards and regulation as technology continues to evolve.

What are the biggest challenges in accurately locating and visualizing subsurface utilities?

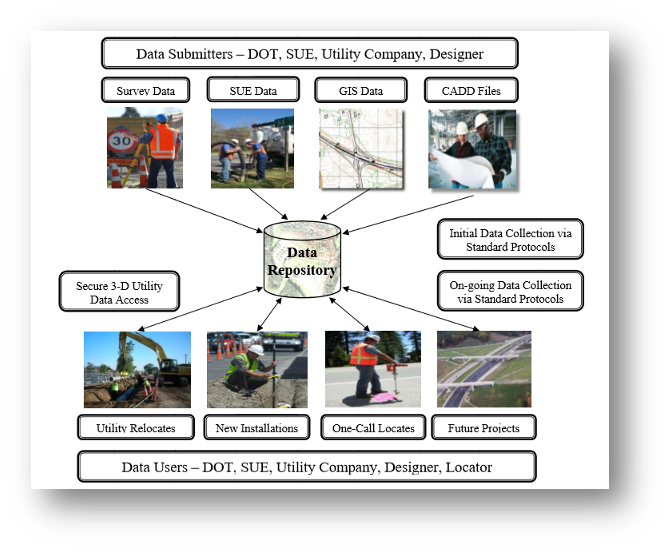

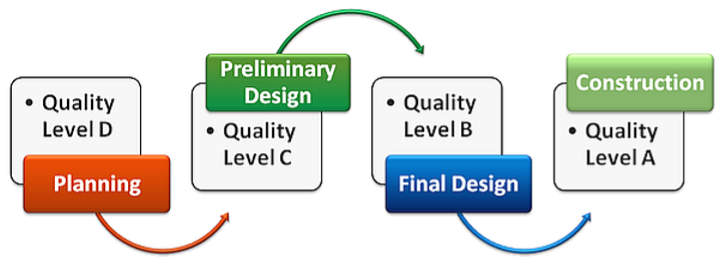

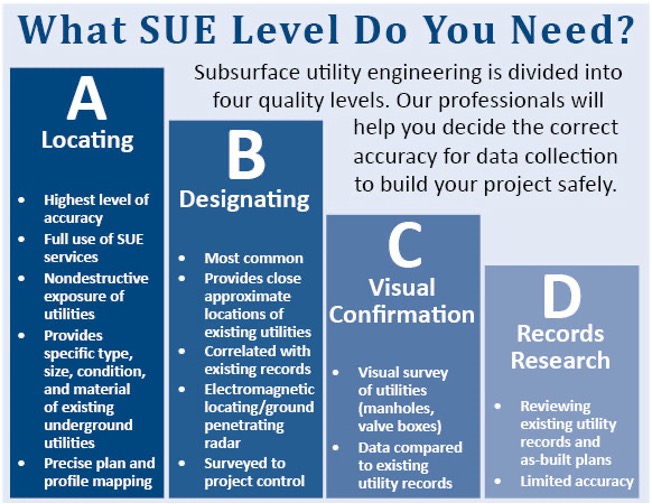

Historically, subsurface utility locations have been marked on an as-needed basis: a locate is requested just before the features need to be found. This has been seen as a necessary but cumbersome process done to satisfy safety and design requirements. The result is not viewed as a permanent dataset that should be preserved; it is only a mark on the ground. This is curious because these features are permanently in place, and it would be logical to preserve this data. Preserving collected data is therefore one of the most important challenges. The other is the completeness of the data: do you have all of it, how accurate is it, and can you substantiate that accuracy and precision?

Utility network documentation and records may not be collected or maintained consistently. The level of accuracy varies, the data is often outdated, and for abandoned infrastructure it is usually missing. Preserving the data means implementing a SUE mapping system that stores both the data and the metadata that gives provenance to its accuracy, precision, and completeness. Just having the data is not enough; you need to be able to visualize the data and trust it to make decisions.

How does the data collection process for subsurface utilities today compare with previous methods, and how have machine learning and advanced visualization techniques transformed subsurface utility engineering (SUE) practices, particularly in improving decision-making for infrastructure projects?

Data collection of utilities has been greatly enhanced by the availability of higher-accuracy and more cost-effective receivers. Previous collection methods may not have even used positioning systems, relying instead on marks on the ground that were quickly destroyed by operations endemic to a construction site. A better understanding of the accuracy that can be achieved and the widespread use of correction services have helped a great deal. The ease of use and trustworthiness of various RTK satellite and terrestrial services have given field operators the ability to tailor their offerings to the required accuracy.

Visualization advancements are often overlooked as a critical aspect of SUE data. If you cannot understand where the data is spatially and visually, then you will have a very difficult time understanding the importance and value of the data. A spreadsheet of attributes and spatial coordinates may contain all the information and metadata, but it is difficult to visualize how that information ties together with all the features you have collected and what else may be in the area.

Machine learning should be understood as a tool, not a panacea. ML is great for finding correlations and insights in your data, but you still need to critically assess what the data is telling you. ML is a fantastic time saver and can reveal a great deal of information that is buried (pun intended), but the expertise lies in the organic interface.

The use of recognized industry standards is one of the best ways to instill trust in your collected data. Collecting and storing the metadata for the geospatial position is well understood: the type of correction service, the geoid used, the coordinate system, the satellites used, and so on are all pieces of data that allow the end user to decide how far they can trust the data. Data from the EM locator tools is equally important and should be collected and stored at the same time as the spatial data.
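As a rough illustration of keeping positional and locator metadata together with each point, here is a minimal Python sketch. The `LocateRecord` class, its field names, and the `missing_metadata` helper are all hypothetical; they do not follow any published schema and only show the idea of storing provenance alongside coordinates.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class LocateRecord:
    """One surveyed utility point plus the metadata needed to judge it.

    All field names are hypothetical; no standard schema is implied.
    """
    feature_id: str
    easting_m: float
    northing_m: float
    elevation_m: float
    # Positioning provenance
    crs: Optional[str] = None                  # e.g. an EPSG code string
    geoid_model: Optional[str] = None
    correction_service: Optional[str] = None   # e.g. "RTK network"
    horizontal_accuracy_m: Optional[float] = None
    vertical_accuracy_m: Optional[float] = None
    # EM locator provenance, captured at the same time as the position
    locator_frequency_hz: Optional[float] = None
    locator_depth_m: Optional[float] = None


def missing_metadata(record: LocateRecord) -> List[str]:
    """Return the names of metadata fields that were left empty."""
    return [f.name for f in fields(record) if getattr(record, f.name) is None]


# Example: a point with position and partial provenance (made-up values).
point = LocateRecord(
    "GAS-001", 500000.0, 5650000.0, 1045.2,
    crs="EPSG:26911",
    correction_service="RTK network",
    horizontal_accuracy_m=0.03,
)
gaps = missing_metadata(point)  # fields an end user could not verify
```

A record with an empty `gaps` list is not automatically trustworthy, but a non-empty one tells the end user exactly which parts of the provenance cannot be checked.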

How is Building Information Modeling (BIM) being integrated with subsurface utility data in Canada, and what are the biggest challenges?

Without subsurface utility data, the BIM model is incomplete. BIM is a process that encourages collaboration between all the disciplines involved in the design, construction, maintenance, and use of buildings. All parties share the same information simultaneously in the same format. If the SUE data is missing, then the collaboration is missing a key dataset. With a centralized database of project data, BIM allows real-time collaboration, reducing errors, conflicts, and delays. SUE data directly impacts the time and cost of delays and errors, and it is a critical safety consideration.

One of the biggest challenges is having confidence in the data’s completeness and accuracy. Many utility owners are reluctant to share their datasets, considering them proprietary or safety-critical to their operations. Addressing these concerns is one of the most time-consuming aspects of assembling a complete dataset and ensuring that the data is of sufficient quality and accuracy. At some point, we need to start assembling the data and keeping it in an accessible and secure manner.

Collecting and siloing data makes it of far less value to anyone working in the area who cannot access it. You may still need to perform a locate, but it is much easier to start from known locations and data than to make assumptions and start from scratch. At that point, newly collected information is used to update the old data, and the quality of the dataset improves as you move forward.
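The idea of starting from known data and improving it with each new locate can be sketched in a few lines of Python. The merge policy here (add unknown features, and replace a known feature only when the new observation reports a smaller horizontal uncertainty) is an assumption made for illustration, as are the `update_dataset` function and the dictionary keys.

```python
from typing import Any, Dict

# Each record is a plain dict; the keys ("h_acc_m", "source") are
# hypothetical names chosen for this sketch.
Record = Dict[str, Any]


def update_dataset(existing: Dict[str, Record],
                   new: Dict[str, Record]) -> Dict[str, Record]:
    """Merge newly located features into an existing dataset.

    Assumed policy: add unknown features, and replace a known feature only
    when the new observation reports a smaller horizontal uncertainty.
    The input dictionaries are left unchanged.
    """
    merged = dict(existing)
    for feature_id, record in new.items():
        old = merged.get(feature_id)
        if old is None or record["h_acc_m"] < old["h_acc_m"]:
            merged[feature_id] = record
    return merged


# Made-up example: one legacy record refined, one new feature added.
legacy = {"W-12": {"h_acc_m": 1.0, "source": "as-built drawing"}}
survey = {
    "W-12": {"h_acc_m": 0.05, "source": "RTK locate"},
    "G-03": {"h_acc_m": 0.05, "source": "RTK locate"},
}
merged = update_dataset(legacy, survey)
```

A production system would more likely keep the superseded record as history rather than discard it, since the provenance of older data is itself useful.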

What role do Digital Twins play in the field of subsurface utility engineering, and how do you see this technology evolving in the coming years?

A subsurface digital twin requires both a digital twin of the underground infrastructure and a digital twin of the geology. Digital twins of underground infrastructure have become a priority for many jurisdictions around the world. So far, the emphasis for Digital Twins has been on above-ground models, primarily because of the widespread availability of technologies for efficiently capturing high-accuracy location data for above-ground infrastructure. Given the critical importance of below-ground infrastructure, integrating subsurface infrastructure into the Digital Twin is the way to get a full and complete dataset.

Can you discuss the importance of standards in the context of subsurface utility data accuracy and regulatory compliance?

There are a number of recognized industry standards used to communicate data accuracy and requirements. ASCE 38-22 and 75-22 can be referenced for further information on accuracy and format standards.

The Canadian standard CSA S-250, revised in 2020, can be used to understand and specify mapping requirements for the recording and depiction of newly installed underground utility infrastructure and related structures. It is intended to promote communication and data sharing throughout the construction lifecycle of projects and the asset’s life. It aims to improve the reliability and accuracy of underground utility infrastructure mapping records and supporting data.

CSA S-250 indicates 3D mapping requirements for the recording and depiction of underground utility infrastructure and related attributes at or below grade. The standard does not apply to above-grade infrastructure. It was one of the first standards to specify levels of both horizontal and vertical positional accuracy.

How do you foresee the regulatory landscape for subsurface utility mapping evolving as technologies like AI, machine learning, and Digital Twins become more prevalent?

Regulatory processes are usually lagging indicators: the rules and enforcement tend to come after the technology is in use and processes and procedures are set up. In that sense, we are in the initial stages of regulating and understanding the use of technologies like AI and Digital Twins, and how they can enhance SUE. There will be a moment when the accepted rules and regulations will be further codified and agreed upon. Existing standards are already in place and are being updated by the industry and regulatory agencies. It is in everyone’s interest to adhere to known standards, as these standards have been developed through careful thought and operational trials. Incorporating new technologies is expected. The rapid adoption of GNSS in the past, the use of data collectors and locate tools, and the addition of RTK are all examples of technology that, once found to enhance safety and productivity, has been integrated into the field.

Looking ahead, what future advancements in geospatial technology do you anticipate will further enhance the field of subsurface utility engineering, and what impact do you foresee these changes having on industry practices?

There will always be enhancements to existing equipment, more accurate and more widely adopted satellite correction services, and more reliable communication from the field to the office, enabling real-time data transfer. This leads to safer and more efficient field operations, allowing missing data to be identified in real time rather than later in the office. The ability to share data in real time between field crews and across disparate field sites is taken for granted in urban areas but is a challenge when beginning remote greenfield operations. Communication technology has come a long way, but at the same time, the amount of data we are sending is increasingly large and detailed.

There are ways of consolidating and understanding raw data, and this is where machine learning is valuable. It can consolidate and provide insights by examining large amounts of field data, both new and historical, that would otherwise be too onerous to review. The advancement of easy-to-use field data collectors and the automated checking of data quality standards in the field allow managers to check for accuracy while still on-site. Additionally, machine learning offers insights into what might already be out there by analyzing the information we have. This is an exciting prospect—if that data is available and we understand its provenance, it could save a great deal of repeat work and, in some cases, impact initial design.
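The automated in-field quality check mentioned above might look something like the following Python sketch. The tolerance values, the `flag_points` function, and the record keys are hypothetical; in practice the thresholds would come from the accuracy level specified for the project rather than from constants in code.

```python
from typing import Any, Dict, List

# Hypothetical project tolerances in metres; real values would come from the
# accuracy level specified for the project, not from this sketch.
MAX_HORIZONTAL_M = 0.10
MAX_VERTICAL_M = 0.15


def flag_points(points: List[Dict[str, Any]]) -> List[str]:
    """Return a human-readable issue for every point that fails a check."""
    issues = []
    for p in points:
        pid = p["id"]
        h, v = p.get("h_acc_m"), p.get("v_acc_m")
        if h is None or v is None:
            # Without an accuracy estimate the point cannot be substantiated.
            issues.append(f"{pid}: accuracy estimate missing")
        elif h > MAX_HORIZONTAL_M or v > MAX_VERTICAL_M:
            issues.append(f"{pid}: outside tolerance (h={h} m, v={v} m)")
    return issues


# Made-up field batch: one good point, one poor fix, one with no estimate.
batch = [
    {"id": "P1", "h_acc_m": 0.03, "v_acc_m": 0.05},
    {"id": "P2", "h_acc_m": 0.25, "v_acc_m": 0.05},
    {"id": "P3", "h_acc_m": None, "v_acc_m": None},
]
issues = flag_points(batch)
```

Running a check like this on the data collector lets the crew re-observe a flagged point while still on-site instead of discovering the gap later in the office.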

There have been several infrastructure failures in the news recently, which raises the questions: What can we learn from these incidents? If you have similar infrastructure, can you assess if similar circumstances are at play? Can machine learning help understand the similarities and probabilities of failure?
