We are driven by our survival instinct, and that compels us to try to know, or at least predict, the future.

People have tried everything from gazing into crystal balls to charting the positions of stars in the sky. Some, like Nostradamus, wrote down cryptic verses about events that were supposedly centuries away.

We always want to get better at predicting the future.

Today, predictions are geared more towards problem-solving and understanding evolving patterns in numerous fields. They are about being prepared and well aware of the situation before a problem strikes, or about taking precautions to either mitigate the impact or avoid the problem altogether.

Artificial intelligence (AI), machine learning, and deep learning are the most hyped and talked-about subjects this year. The hype feels like it has reached its peak. Therefore, I was determined to write about one of them in connection with wildfires, which have become one of the most threatening natural hazards.

If you are an AI enthusiast, you have probably heard sayings like “AI is the future” or “AI is the latest trend” countless times before reading this article. Just like me, you have probably tried ChatGPT or one of its equivalents.

However, I can say with certainty that I wrote this article entirely on my own.

What is Deep Learning?

Most of us use AI, machine learning, and deep learning interchangeably. However, they are not the same, and there are many differences between them.

For instance, AI is the broad goal of getting a computer to mimic human intelligence and automate tasks such as detection, classification, and prediction. Machine learning is the set of algorithms that learn from data in order to achieve AI.

Deep learning, in turn, is a subset of machine learning. It is based on neural networks, which are made up of simple computing units (neurons) arranged in layers that loosely mimic the functioning of the human brain. An interesting thing about deep learning is that the same kind of network can be adapted to a variety of problems by training it on a problem-specific dataset.

Deep learning applied to spatial information, such as imagery, falls into four main categories:

- Object detection

- Instance segmentation

- Image classification

- Pixel classification

In recent years, AI using deep learning has revolutionized the way we interact with and understand our environment and planet Earth. AI-infused workflows in the geospatial world have enhanced traditional geospatial mapping, data collection, and spatial analysis.

Why is deep learning so crucial? The answer lies in its broad applicability to complex geospatial problems and various Earth-related challenges. It helps us fill the blind spots caused by incomplete, uncaptured, or lost data, downscale data and get to the root of problems, and forecast by studying patterns and behavior faster than the human mind can.

Can deep learning be used for wildfire prediction?

One of the most visible symptoms of climate change is the increase in the frequency and intensity of forest fires worldwide. And that is exactly what we are witnessing.

In Canada, wildfire seasons frequently surpass previous records. This year, Canada has seen one of its most grueling wildfire seasons.

For weeks, Canadian news has been flooded with reports on wildfires, dashboards, interactive maps, and satellite imagery that displayed hotspots, air quality, warnings, and evacuation routes. This year, North America has experienced the worst air quality on record, endangering millions of people.

With rapidly advancing technology, why not predict the fire beforehand to reduce its deadly impact? If we could, the situation would be very different, and we would have a better grip on these emergencies.

There is broad agreement in the research community that deep learning algorithms such as convolutional neural networks (CNNs) perform well at flame and smoke detection. A CNN is a deep learning algorithm that learns patterns from images, including visual cues that can go unnoticed by the human eye.

Source: https://www.analyticsvidhya.com/blog/2021/05/convolutional-neural-networks-cnn/

There is no need to dig into the mathematics to understand how a CNN works.

Here, I have picked a very basic example of image recognition. The input is an image of a bird. This image is passed through hidden layers of neurons that have previously been trained on a set of images. A CNN is a neural network whose neurons are inspired by the human brain and organized in layers that capture increasingly complex features. Each neuron applies its own learned weights and bias to the data it receives and passes the result down to the next layer of neurons. This stacking of many layers is what makes the learning "deep."

Ultimately, the network assigns a probability to each possible label and passes the most probable one to the output layer as the prediction.
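To make the flow of an image through the layers more concrete, here is a minimal sketch in plain Python. It is not a trained model: the 6x6 image, the edge-detecting kernel, the weights, and the labels "edge" and "flat" are all made-up values chosen for illustration, and a real CNN would learn its kernels and weights from many training images.

```python
import math

def conv2d(image, kernel):
    """Slide a small kernel over a 2D image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu(feature_map):
    """Replace negative activations with zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size block."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict(image, kernel, weights, biases, labels):
    """Convolution -> ReLU -> pooling -> weighted sum + bias -> softmax."""
    features = max_pool(relu(conv2d(image, kernel)))
    flat = [v for row in features for v in row]
    scores = [sum(w * x for w, x in zip(ws, flat)) + b
              for ws, b in zip(weights, biases)]
    probs = softmax(scores)
    best = max(range(len(labels)), key=lambda k: probs[k])
    return labels[best], probs

# Toy input (assumed values): dark on the left, bright on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
# Hand-written vertical-edge kernel; a trained CNN would learn this.
kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
labels = ["edge", "flat"]
weights = [[0.5, 0.5, 0.5, 0.5], [-0.5, -0.5, -0.5, -0.5]]
biases = [0.0, 1.0]

label, probs = predict(image, kernel, weights, biases, labels)
print(label, [round(p, 4) for p in probs])
```

Note that the output is a label with a probability attached, not a new image. Deep networks repeat the convolution and pooling steps many times before the final scoring layer.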

Wildfire detection, on the other hand, is far more complex, and as a result, the deep learning model needs to be much more intricate.

Flow chart of image fire detection algorithms based on detection CNNs.

Source: Pu Li and Wangda Zhao via https://www.sciencedirect.com/science/article/pii/S2214157X2030085X

As shown in the flow chart above, in the case of fire, an object detection CNN handles feature extraction, region proposals, and classification. First, the CNN takes an input image and generates region proposals through convolution, pooling, and similar operations. Second, it performs region-based object detection: convolutional layers, pooling layers, fully connected layers, and other components determine whether there is a fire in each proposed region.

In the case of wildfires, there are other important driving factors that are not included here. These include wind speed and direction, fire fuel, drought conditions, and irregular topography.
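The two-stage idea (propose regions, then decide for each region whether it contains fire) can be sketched without any deep learning library. The code below is only an illustration of that flow, not the method from the cited paper: the names `Region`, `propose_regions`, `fire_score`, and `detect_fire` are hypothetical, the proposals are a simple grid of tiles, and a crude colour rule stands in for the CNN layers that would score each region in a real detector.

```python
from dataclasses import dataclass

@dataclass
class Region:
    row: int      # top-left corner of the candidate region
    col: int
    size: int     # side length in pixels
    score: float = 0.0

def propose_regions(height, width, size, stride):
    """Stage 1 stand-in: tile the image with square candidate regions."""
    return [
        Region(r, c, size)
        for r in range(0, height - size + 1, stride)
        for c in range(0, width - size + 1, stride)
    ]

def fire_score(image, region):
    """Stage 2 stand-in: fraction of pixels that look flame-coloured.

    A real detector would run convolutional, pooling, and fully
    connected layers here instead of this hand-written colour rule.
    """
    hits = 0
    for r in range(region.row, region.row + region.size):
        for c in range(region.col, region.col + region.size):
            red, green, blue = image[r][c]
            if red > 200 and green > 80 and blue < 100:
                hits += 1
    return hits / (region.size ** 2)

def detect_fire(image, size=2, stride=2, threshold=0.5):
    """Score every proposed region and keep those above the threshold."""
    detections = []
    for region in propose_regions(len(image), len(image[0]), size, stride):
        region.score = fire_score(image, region)
        if region.score >= threshold:
            detections.append(region)
    return detections

# Toy 4x4 RGB image (assumed values): sky everywhere, flames bottom-right.
sky, flame = (100, 150, 230), (255, 140, 0)
image = [
    [sky, sky, sky, sky],
    [sky, sky, sky, sky],
    [sky, sky, flame, flame],
    [sky, sky, flame, flame],
]

detections = detect_fire(image)
for d in detections:
    print(f"fire candidate at row={d.row}, col={d.col}, score={d.score:.2f}")
```

The design point is the separation of concerns: the proposal step narrows down where to look, and the classification step decides what is there.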

Data complexity and its role in fire detection

Wildfire data is multifaceted and evolving. It involves complex, interrelated data such as the type of forest fuel (grass, shrubs, small and big trees), the weather in the area (wind conditions, temperature, and humidity), the topography, and the source of the fire (human-made or lightning). Hence, there is a deluge of data from multiple sources, collected under continuously changing weather conditions.

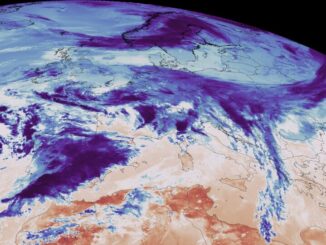
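One way to picture how such mixed inputs reach a model is to flatten them into a single numeric vector. The sketch below is an assumption-laden illustration: the `WildfireObservation` record, its field names, and its units are hypothetical, slope is used as a simple proxy for topography, and a real pipeline would also scale the values and add many more variables.

```python
import math
from dataclasses import dataclass

FUEL_TYPES = ["grass", "shrub", "small_tree", "big_tree"]
IGNITION_SOURCES = ["human", "lightning"]

@dataclass
class WildfireObservation:
    # Hypothetical record; field names and units are assumptions.
    fuel_type: str
    wind_speed_kmh: float
    wind_direction_deg: float
    temperature_c: float
    relative_humidity_pct: float
    slope_deg: float
    ignition_source: str

def one_hot(value, categories):
    """Encode a category as a list with a single 1.0 entry."""
    if value not in categories:
        raise ValueError(f"unknown category: {value!r}")
    return [1.0 if value == c else 0.0 for c in categories]

def to_feature_vector(obs):
    """Combine fuel, weather, topography, and ignition source into one list."""
    # Encode direction as sine and cosine so 359 and 1 degrees end up close.
    angle = math.radians(obs.wind_direction_deg)
    weather_and_terrain = [
        obs.wind_speed_kmh,
        math.sin(angle),
        math.cos(angle),
        obs.temperature_c,
        obs.relative_humidity_pct,
        obs.slope_deg,
    ]
    return (
        one_hot(obs.fuel_type, FUEL_TYPES)
        + weather_and_terrain
        + one_hot(obs.ignition_source, IGNITION_SOURCES)
    )

obs = WildfireObservation(
    fuel_type="shrub",
    wind_speed_kmh=30.0,
    wind_direction_deg=90.0,
    temperature_c=32.0,
    relative_humidity_pct=18.0,
    slope_deg=12.0,
    ignition_source="lightning",
)
vector = to_feature_vector(obs)
print(len(vector), vector)
```

Because weather changes continuously, a record like this would have to be rebuilt for every location and time step, which is part of why the data volume grows so quickly.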

In recent studies, satellite, terrestrial, and aerial technologies have been the main means of collecting data and detecting wildfires early on. Short-wave infrared and thermal infrared channels, which are highly sensitive to temperature, allow wildfires to be detected over large areas from satellites. Sun-synchronous satellites have high spatial resolution but low temporal resolution: they pass over a given location too infrequently, which makes it difficult to detect wildfires in their early stages.

In contrast, geostationary satellites have high temporal resolution but low spatial resolution. Additionally, because of interference from clouds, satellite data requires extra processing to eliminate errors. Canada is planning to have a satellite just for wildfire management in the future.

Small UAVs or security cameras, such as CCTV cameras, on the other hand, have significantly lower operating costs than satellites, greater mobility, and adaptable perspective and resolution. They also have high potential for detecting wildfires early and providing on-site information.

Given that wildfires typically start with small amounts of smoke, early detection using cameras mounted in high-risk locations can do more to secure the window of opportunity for extinguishing a wildfire than detection at low spatial resolution over a wide area.

Can we make vigilant decisions in the future?

We need to be prepared for the evolving forest fire season. How well we combat fires and their devastating impact shows how ready we are.

- Building models that account for the irregular topography of the landscapes most vulnerable to wildfires can help us obtain clearer results.

- Web-based tools should support decision-makers, authorities, communities, and firefighters by providing up-to-date information.

- Models developed using new methodologies must be integrated with real-time data coming from a variety of sensors: spectrometers, satellites, infrared scanners, lasers, or 3D remote sensing devices.

- Parallel simulation algorithms that produce large numbers of simulations in a short period of time can improve the quality of results.

- More advanced sensors, breakthrough artificial intelligence prediction techniques, big data algorithm-based modeling, and cutting-edge visualization software will all be used in forest fire research in the future to improve decision-making.

- Forest fires need to be anticipated in the future, and modeling and simulation can be useful in this challenging endeavor.

References:

https://www.sciencedirect.com/science/article/pii/S156984322300122X?ref=cra_js_challenge&fr=RR-1

https://www.preventionweb.net/news/power-ai-wildfire-prediction-and-prevention

https://www.sciencedirect.com/science/article/pii/S1569843222002400

https://globalnews.ca/news/9860962/wildfires-prevention-artificial-intelligence/

https://newsroom.carleton.ca/story/forest-fires-planning-modelling-factors/

https://www.analyticsinsight.net/what-is-deep-learning-significance-of-deep-learning/
