For a man who was not that enthusiastic about reality capture 20 years ago, Jesús Bonet, survey engineer and Hexagon Geosystems’ Director of Sales and Business Development, is now so firmly in the reality capture domain that he cannot envision himself focusing on anything else.

Geospatial journalist Mary Jo Wagner caught up with him after the recent buildingSMART International Standards Summit to get his unique view on reality capture’s trajectory, from “problematic dance partner” to capable, if not essential, enabler. In a wide-ranging discussion, we learn why reality capture has captivated him for so long, why the technology has had a meteoric rise, why 2017 was such a key year, why workflows are a big deal, and what he thinks will be the ultimate reality capture disruptor.

MJW: You’ve spent nearly your entire 20-year career in reality capture (RC). What is it about this technology sector that has kept you so engaged?

JB: In the 21 years I’ve been working in this industry, no one has ever asked me that question. Something I love about reality capture technology is that it allows you to touch a lot of different applications and segments. And this is very nice because you can develop yourself in many different ways. Being in an industry with such transformative power makes it hard to imagine myself doing anything else. But I didn’t feel that way at the start.

At the beginning of my career, I was a regional sales manager for eastern Spain and I was working with GNSS, total stations, machine control and laser scanning. The scanning technology was very complicated. The devices were very big, very heavy and difficult to carry; they had a lot of cables, and the software wasn’t super smooth. It was also incredibly expensive. It was really only for very high-level, select customers like universities. So, it wasn’t easy to sell laser scanning. In 2009, the owner of my company offered me a promotion to become the sales manager for all of Spain, but only for laser scanning. No one wanted to take care of laser scanning at that time. So, I wasn’t enthusiastic about having to dance in the dark with the problematic partner. But the owner came from Brazil, where they were using this technology a lot with important oil and gas operators and shipbuilding companies. He was confident the technology would grow. And he was confident in me. So I took it on.

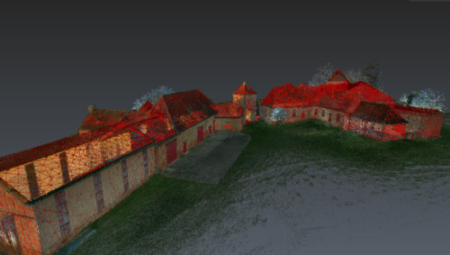

At the beginning it was a bit difficult, but then I realised how multi-disciplined the technology is and how much you could do with it. I knew I could sell that versatility to customers. In November/December 2009, I made my first Leica ScanStation 2 sale to Centro Tecnológico del Mármol (Region of Murcia), a sort of research centre working on quarries, mines, and everything related to marble. The scanner was fast enough, with good accuracy, and most importantly, it had sufficient range for scanning in quarries and open pit mines. With better education and understanding of the technology, scanning sales became more plentiful. As the technology improved and expanded, it became easier to prove the business case, and now, with all the facets of reality capture, such as mobile mapping and autonomous laser scanning, versatility is still one of the industry’s main unique selling points. Nothing compares to all the reality capture technology that we can offer to the market, and that flexibility enables me to sell to architects, archeologists, any kind of engineer, surveyors and so many others. Getting to learn about all these different sectors, how they work and what their challenges are is a fascinating and fulfilling part of my job.

So, although I didn’t embrace laser scanning with enthusiasm right away, now I have nothing but enthusiasm for it. Reality capture has captivated me to the extent that I’ve not wanted to be involved in anything else.

MJW: When you first began your RC career, did you envision that the technology would advance as quickly as it has and would be as ubiquitous as it’s becoming today? To what do you attribute its proliferation?

JB: It became clear to me that 3D technology and the amount of data you could quickly capture would be the future. But the problems were the price, the complexity of the solution and how to use the software. You really needed experts processing the information. However, the promise of 3D technology was strong. So, I expected the technology would improve, but definitely not so fast. What we have done in less than 20 years is just impressive. How we did it 20 years ago and how we do it now is mind blowing.

Its proliferation has been so fast because of the bold engineering to produce smaller and lighter devices and advance the software. We’ve created hardware that is more attractive and easier to use so you don’t have to be an expert to use our sensors. Almost anyone can use them. They’re small, fast, accurate, and most importantly, they solve a lot of customers’ problems. The big challenge of reality capture technology has always been with the software and how to handle all this data. What do we do with millions of points and millions of images? So, as important as it has been to develop nice hardware with strong features, the biggest asset to growing reality capture has been in producing easy to use software solutions with super powerful algorithms for classifying point clouds, point cloud cleaning, and tools to fit 3D models in the point cloud. We are overcoming the historically big bottleneck of data processing with machine learning, artificial intelligence, and new software algorithms.

“Facility management and the enterprise asset management world will definitely be disrupted by reality capture.”

MJW: It’s fair to say that the development of terrestrial laser scanning (TLS) 20 years ago was one of the biggest transformative milestones in the geomatics industry. What would you say have been the top three RC milestones since?

JB: I think number one is sensor integration. At first, we just had laser scanning. But then it expanded to other technologies like mobile mapping and now we have autonomous mobile solutions. That has given us the ability to combine terrestrial laser scanners with data coming from a UAV, or a total station or mobile mapping system. That versatility is fantastic.

Number two is the advent of SLAM technology to enable dynamic scanning. That means you no longer have to set your tripod on a point and scan from a single, static location. Now you can have the laser scanner in your hands and start walking.

Number three is the emerging milestone of autonomous solutions with limited human intervention. This, without a doubt, is a game changer. We may not be ready today to be fully autonomous, but we are in the peak and when it arrives, it’ll arrive from Hexagon. I have no doubt about that.

MJW: Although the business case for TLS took some time to evolve, the adoption of mobile mapping, mobile scanners and interest in autonomous scanning has been quicker and wider across many industries. Why do you think users have been faster to embrace and integrate these other RC approaches?

JB: Good question. When we released mobile mapping technology, I think TLS was still about 95 percent of the reality capture revenue. I analyse every year how TLS, mobile mapping and autonomous solutions evolve, and it’s very interesting to see how fast mobile mapping and SLAM technology are growing. I attribute this consistent growth to one factor: scalability. If you have to scan your house, TLS is probably still the best technology to do it. But now imagine you have to scan 500 km of railway. Are you going to send one person with one laser scanner and place it every 10 m to scan the whole length of 500 km? Forget it. Mobile mapping technology, which integrates LiDAR, GNSS, cameras and an IMU, provides the ability to scan in movement. Now you can drive that 500 km at regular traffic speeds and acquire all the data in a few hours. That’s a simple example of how productivity and efficiency have attracted users to mobile mapping.

SLAM is something similar. Take the BLK2GO, for example. If you have to scan your 120-square-metre house, it’ll take about 30-40 minutes to scan that with TLS. With the BLK2GO, you can probably capture it in five minutes. You can argue that if you have to scan only one house, the time difference is not that big. But imagine if you’re a big real estate company that needs to scan thousands of houses. If in one day you can scan 20 houses instead of two, the productivity and efficiency of mobile scanning make a huge difference.

The ability to complement and integrate different reality capture sensors to suit projects brings tremendous value to users and that’s a business case and ROI they understand.

MJW: In which markets has RC been the biggest disruptor and how have those industries benefitted?

JB: I would say reality capture can be a disruptor for any kind of segment or application. It certainly has been for our strong legacy segment of surveying and construction. And that’s again because of the amount of data you’re capturing and the speed at which you can capture it.

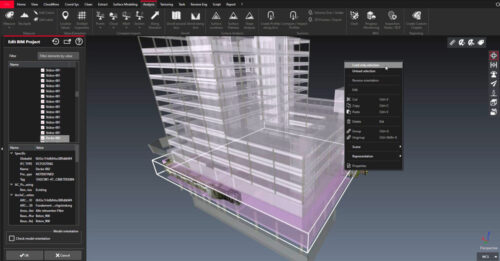

Probably the biggest opportunity or application for reality capture right now is within the Building Information Modelling (BIM) industry: Scan-to-CAD and Scan-to-BIM for design, and construction verification for construction.

Facility management and the enterprise asset management world will definitely be disrupted by reality capture. Take utilities, for example. Utilities are awash in assets. Reality capture provides huge value in providing documentation and digital twins of facilities to help water managers, gas managers, electricity managers, and telecommunication operators efficiently and effectively operate and maintain their assets. Large industrial facilities like oil refineries are another example. Can you imagine how many important assets, elements and pieces of equipment inside the refinery have to be monitored, checked and maintained? You need a digital twin for that. You need to visualise the assets if you want to manage them. Reality capture enables you to capture the reality to operate and maintain that reality. Imagine how many buildings we have in the world that don’t have any documentation. There is a huge number of structures (buildings, bridges, tunnels) for which there is no documentation. And if you want to maintain these assets, you have to be able to view the information. That’s why reality capture will be disruptive for facility and asset management.

The third segment is the real estate world. Houses will always be sold, purchased, or rented because they are a human need. To make this transaction you need to be able to show the property to the potential buyer or renter. This is a huge investment, so a 2D floor plan should be the minimum visual available. But why not a 3D model, or render or spherical image? In some countries, providing all of those visuals is mandatory by law. For example, in the Netherlands, there is an online public platform called Funda where every house that is for sale or for rent must be listed. So if you want to buy a house in Amsterdam, you click on one you’re interested in, and by law, you’ll see photos of the property and you’ll also see a 2D floor plan, a 3D model of the house or apartment and you’ll have spherical images of the entire interior, allowing you to take measurements and move around the flat. All of this is mandatory and it’s all from reality capture. This is a new segment with a completely different user base. So this shows the number of opportunities that reality capture is opening to us.

MJW: Software is where a lot of the magic happens in transforming 3D data into valuable intelligence, yet it’s often overshadowed by the gloss and gadgetry of the physical hardware. This is particularly true for RC. How has software transformed the maturation of RC and its capabilities and how do you see it further shaping reality in the future?

JB: That’s an excellent question. We’ve always had an imbalance in reality capture where hardware was considered superior to software. It’s very nice to see that we continue to improve the hardware, but the big improvement is coming from the software. I’m not saying we’re aligned with the hardware. I believe we still need a bit more on the software. But this “bit more” is already coming with machine learning, AI, and automatic procedures to produce 3D models and identify assets.

Selling software is often more complex than selling hardware, particularly reality capture software, which is all about workflows. It’s about understanding the problem you are resolving for the customer. And a lot of that is driven by software. You need to understand what the customer’s problem is, what their pain is, and which technology is best to resolve that. Maybe a customer’s problem is producing a BIM. You need to describe to the customer the best workflow options to resolve that issue, from acquiring the data, to the specific software pieces needed to process the data, to exporting the data to enable them to work in their BIM platform, and to finally producing a BIM. When you understand workflows, you will be successful.

Another component that’s important for us is cloud solutions. Reality capture has historically been a complex piece of software that you had to install on your laptop and use locally to process the data before sharing the 3D information with someone. Today, the work environment is more and more about collaboration, sharing information with different stakeholders, and working in a centralised mode. This is what our Reality Cloud Studio solution, powered by HxDR, offers. The way we see the future is, you are working in the field, you are capturing the reality in the field, and during the acquisition you are able to upload the data to a server and all the processing and transformation happens in the cloud: importing the data, registering it, cleaning it, and then creating deliverables like 3D models or 2D sections. All of that data transformation is happening while that person is still in the field capturing data. It’s fantastic.

“We may not be ready today to be fully autonomous, but we are in the peak and when it arrives, it’ll arrive from Hexagon.”

MJW: When did you see the RC user base expand beyond the domain of surveyors and engineers and, as a surveying engineer and passionate RC advocate, what was that like to witness? Who are some of the more unique RC customers you are aware of?

JB: I would say 2017. For me, that’s when the user base changed from domain experts to everyone. In the early years, we were selling scanners to experts, high-level engineers, high-level IT people because the software was complex, and the hardware was difficult to use. This dramatically changed with the introduction of the BLK360 in 2017. That’s when I believed reality capture would be for everyone because suddenly, we had reached the phase where we had small, simple, easy-to-use devices with intuitive and user-friendly software. That was reality capture’s big bang. It was an incredibly exciting time, and it’s opened a number of new markets for us.

One quite unique market is the visual effects world and media and entertainment. Whether that’s selling scanners to Netflix for the Squid Game TV series, or selling to Lucasfilm for a Star Wars film or working with video game companies, this is a novel category of customer. Utilities, including water, gas, electricity and telecommunications, are a new customer. Real estate. And in the industrial world there are a lot of special cases like nuclear energy, ship building, pharmaceutical and chemical plants, manufacturing, automotive and aerospace. It’s very diverse. And one sector that we are getting more and more involved in is public safety. We are selling a lot of technology to police forces, firefighters, the army and private security.

MJW: Hexagon is focused on Smart Digital Reality™, a term the group coined to advance the utility and potential of digital twins. How do Hexagon’s RC technology and platforms align with this goal and are you targeting the development of intelligent digital twins because you see them as the next value-added progression for RC users?

JB: That’s another good question. Smart Digital Reality (SDR) is a primary theme at Hexagon now. And in my words, SDR means simply connecting the physical world with the digital world. How do we do that? Through a streamlined, seamless data loop. By using our hardware sensor technology, we can capture the reality, produce a huge amount of data, use our software solutions to transfer this data into something that other software solutions can read, understand and apply, and then loop back to the physical world to make decisions based on the digital information we have.

What is Hexagon Geosystems’ contribution to SDR? We contribute the most important part: capturing the reality. Providing the digital replica of the physical world and the digital information is the domain of Geosystems’ tools. We have a super important contribution to this informational loop. Returning to the example of the 500-km railway, imagine Deutsche Bahn in Germany or SNCF in France and their railway managers who need to capture 500 km of the railway for operations and maintenance. They need to manage the network to understand if they need to change an electric cable or change a pylon or repair track components. By using reality capture they can quickly capture that railway and all its corresponding elements and create a digital twin of the entire 500-km infrastructure. Then they can connect that data to their facility management software to know the location of every asset, its condition, and its service history. With that information, they can create work orders for maintenance and easily assign work to their field technicians. They can better run the operations and maintenance of the railway. This is just one example of how valuable intelligent digital twins are for many plant, facilities, aerospace, and automotive customers that use reality capture for these types of operations and maintenance.

“When you understand workflows, you’ll be successful in reality capture.”

MJW: You’ve mentioned that Hexagon is focused on integrating RC deliverables such as digital twins with enterprise asset management to enhance operations and maintenance, but users have already been smartening their digital twins with asset data to support those tasks. Are you targeting this ability because it’s been a challenging or laborious process to date? What are the advances you see that will help expedite those intelligent connections more effectively?

JB: Users have been able to smarten digital twins with business-critical data like assets, but it hasn’t been easy or efficient to do. It’s required a lot of manual, tedious labour to geotag each asset. In addition, there is some complexity around the digital twin itself.

In its simplest form, a digital twin is a replica of something physical, but it can take on many forms. If I need a digital twin of my house or my office, it can be 3D or 2D. It can be pictures only or have geometry. So, there are different options and definitions of a digital twin. A 2D floor plan can be the starting point of a digital twin because it’s a digital representation of something real. And I can have links in this 2D floor plan that refer to a particular physical component in reality. For example, if I’m creating a 2D floor plan of my flat, I have a door here that is represented on the plan and I can connect the coordinate for the door object with a database that will tell me what kind of door it is, its height and the name of the provider that made the door. All this metadata is something that I can connect. I can connect a 2D floor plan with a 3D drawing or a 3D model with a BIM. But as I said, the only way to do this has been by manually creating links through geotagging.

That’s about to change, however. Hexagon is working on an autonomous solution for auto tagging, which I think is the future.

What we envision––and this is my dream––is you scan in the field, upload the data to the cloud, automatically create a 3D model in the cloud and use Hexagon’s algorithm to automatically identify all the assets in the model. Imagine how fast that workflow is from capturing data to having an intelligent digital twin without human intervention. This is happening now, and this will be the disruptor.

MJW: How do you see autonomous scanning impacting RC as we know it today?

JB: Today we are talking about autonomy, but we are not yet at full autonomy. We are moving towards that. Using a Boston Dynamics robot with a BLK ARC mounted on top for scanning dangerous or risky areas with minimal human intervention is a good example of this. If you go to a nuclear plant and you need to scan in a high radioactivity area, you can’t do that for obvious health and safety reasons. But you can send a robot. And in the end, if the robot dies because of radioactivity, the nuclear plant can afford that. Another example is an underground mine where some areas are forbidden to enter. You can dispatch a robot. These types of remote-controlled, nearly autonomous applications make sense. They also make good sense if we are talking about repetitive tasks because you can define the trajectory of the robot one time and it’ll follow it every time.

What I envision for full autonomy is having important facilities like nuclear plants, or oil and gas plants, continuously monitored by autonomous sensors. A robot with a BLK ARC, or a drone with BLK2FLY consistently and routinely scanning. And not only with our technology but combined with other technologies like gas detectors or radioactivity detectors. I think this is the way forward with autonomy.

MJW: We’ve gone from the first bulky, heavy TLS to 600-gram mobile laser scanners you can hold in your hand. Or mount on a robot. What do you think is next in RC?

JB: In general, I think we’ll continue to see a focus on improving workflows. I think we can expect more automation in software solutions with AI and machine learning algorithms, and a continued march towards cloud solutions. We can also expect more consistent hardware solutions, finding the right balance between size/weight, accuracy/resolution and robustness/reliability. And finally, we certainly should expect improvements in SLAM technology for dynamic acquisitions.

Broadening out to five or ten years, I look to a futuristic scene from the 2012 film Prometheus. There is one particular sequence in the film that impressed me a lot because when I saw it, I thought, that is the future for reality capture. In the film, they arrive on a new planet with underground caves, and instead of sending people to explore the caves, they take two small balls and throw them up in the air, and the balls start flying, emitting red lasers, and scanning. They navigate inside the caves via the scanning data sent in real time. That navigation capability is already here with SLAM. The balls were airborne, which is technology we have today: the drone, the BLK2FLY. The balls were scanning autonomously, which is technology we have today. And they were sending the data directly to the server and colleagues were viewing what was being scanned in real time, which is also technology we have today. The difference is they were putting everything together in a single device, a ball. This is where I see the future of reality capture. More connected technology that works autonomously and enables real-time data exchange. Today, we still haven’t connected all the integration dots. We’re still working in silos. But we’re almost there.
